Issue #41: Holy AI, Batman!

Howdy Bob, every once in a while, a new AI tool pops onto the scene that is so good and so amazing that you have to pick your jaw up off the floor after using it. I caught wind of a new music generation tool named Suno AI from an article on TechCrunch. I helped produce AI in A-minor, a first-of-its-kind music event where Mindgrub composed original AI music in the style of Mozart and Philip Glass, performed by the amazing Baltimore Symphony Orchestra. After learning tons of AI music models, I consider myself pretty up to date on the latest AI music tools.

I knew nothing of Suno, and Holy AI, Batman! Suno AI is the best and most fun tool I’ve used in a very long time. What’s amazing is that it not only creates music in any popular style you want but also generates lyrics that are on point. Go try it out; it’s worth it. I’ll be here when you get back.

Now that I’ve played with this thing for hours, generating funny songs about my kids, romantic taco pop songs, and happy songs for our clients, I had to make an album to showcase all of this. Feel free to listen while you read. Here are some of the standouts, with details on the prompts I used to make these bops.

Taco Love

Generated with Suno using the prompt: “Tacos are delcious and I like eating them as a romantic R&B song.”

Technological Rhapsody

Generated with Suno using the prompt “Male vocalist ’80s pop about Jason Perry’s ‘Thoughts on Tech & Things’ newsletter covering emerging tech and AI. Catchy lyrics on insider insights. Electric beats throughout”

Taco Love the Remix

Generated with Suno using the prompt: “Tacos are delcious and I like eating them as a romantic R&B song.”

Mindgrub – The Future is Now

Generated with Suno using the prompt “Get your technical agency or creative consulting services from Mindgrub as a 90’s style ad jingle that ends with it ending with you’ll know we do AI to.”

After a few days of listening to and creating all types of music, I started to wonder, as a person in the agency space, how far I could get in creating a series of social media ads that are fully AI-generated but assembled and edited by my hands.

My idea started with creating a series of short Instagram video ads, each promoting my newsletter, AI workshops, and, of course, reminding people I’m an expert in the AI space. I wanted to limit each of these ads to 30-45 seconds and stitch them together so they followed a theme.

I knew Suno was perfect for music, and as a backup, I could use Google MusicFX to make ambient background music. For video generation, after a few weeks of comparing AI video generators, something I touched on in a newsletter two weeks ago, I picked RunwayML. Knowing each video runs 4 seconds by default, I splurged for a paid plan that allows unlimited video generation. For the scripts, I turned to Anthropic’s new Claude 3 and provided it with a very long and detailed prompt that explained the concept, gave a description of my personal brand and products, and then asked it to generate text prompts for a text-to-audio AI generator and multiple scenes of scenery and people, with some designed to look like people I know. I also asked it to suggest text for the intro screens on social media.

Since I paid for the top RunwayML plan, I decided to train its AI model on about 26 images of me, allowing it to generate original images from my photos. I also used some of the images I generated with prompts from Claude as reference images for RunwayML to transform into video. You can see more of these in the end product, but here are some samples to give you an idea:

AI-trained image model in runwayML generated with the prompt: “Jason in an album cover of him lying down with a knee up resting on his elbow looking into the camera in an 80’s style rock outfit.”

AI-trained image model in runwayML generated with the prompt: “Jason in an album cover of him lying down with a knee up resting on his elbow looking into the camera in an 80’s style rock outfit.”

AI-trained image model in runwayML generated with the prompt: “Jason is a muscular 80’s style cartoon character in front of a castle holding a powerful sword.”

AI-trained image model in runwayML generated with the prompt: “Jason is a cyborg character flying over a futuristic version of New Orleans.”

AI-trained image model in runwayML generated with the prompt: “Jason is a black Samurai in a neo-tokyo futuristic version of New Orleans in anime style.”

Once you have these images, RunwayML will allow you to seed its text-to-video generator with an initial image and provide a prompt that explains how the video should move. For example, this is an initial image I generated from Claude’s recommended prompt:

AI-trained image model in runwayML generated with the prompt: “Close-up of Jason’s face with a vibrant, electric glow, surrounded by floating telephone receivers and ’80s-style geometric patterns.”

I took this generated image and fed it to the video model, providing it with a description of how I wanted the video to animate. This often took some rephrasing of the prompt and the use of the motion brush tool to select what should move and what should not. Once generated, I could extend the video 4 seconds at a time, though sometimes the additional seconds took an unexpected turn.

AI generated video with runwayML with the prompt: “The 80’s style head stays the same, with the vector objects move very, very fast behind him.” extended to 14 seconds.

I needed to fill in some gaps between music prompts with a script generated by Claude. I took the text and ran it through OpenAI’s text-to-speech engine. You can try it out on my playground. For the voice, I chose Onyx and the HD option. The speed at which the voice spoke was frustrating, but for my needs I felt the voice had the right tone.
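If you would rather script the voiceover step than click through a playground, here is a rough sketch of what it could look like. It assumes OpenAI's public speech endpoint with the `tts-1-hd` model and the `onyx` voice, plus an API key in an `OPENAI_API_KEY` environment variable. The function names and the output file name are mine, purely for illustration, and this is not necessarily how the playground works under the hood.

```python
import json
import os
import urllib.request

# Assumption: OpenAI's text-to-speech REST endpoint.
TTS_URL = "https://api.openai.com/v1/audio/speech"


def build_tts_payload(text, voice="onyx", model="tts-1-hd", speed=1.0):
    """Build the JSON body for a speech request (hypothetical helper)."""
    if not text.strip():
        raise ValueError("text must not be empty")
    # The API accepts a speed multiplier from 0.25 to 4.0; 1.0 is normal pace.
    if not 0.25 <= speed <= 4.0:
        raise ValueError("speed must be between 0.25 and 4.0")
    return {"model": model, "voice": voice, "input": text, "speed": speed}


def synthesize(text, out_path, **options):
    """Send the script text to the endpoint and save the returned audio."""
    payload = build_tts_payload(text, **options)
    request = urllib.request.Request(
        TTS_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    # The response body is the audio itself (MP3 unless another format is requested).
    with urllib.request.urlopen(request) as response, open(out_path, "wb") as audio:
        audio.write(response.read())
    return out_path
```

Calling something like `synthesize(script_text, "voiceover.mp3", speed=1.1)` would write the narration to disk, and nudging `speed` up or down is one way to tackle the pacing problem.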

With all the raw ingredients generated, I assembled the content with some image editing in Photomator Pro (I did a VERY quick job adding AI-generated album covers to AI-generated pictures of tables against backdrops), then pulled the original AI-generated audio, video, and text into Adobe Premiere Pro to edit with mostly default audio and video transitions. The results speak for themselves:

This is a concept for an old-school music infomercial of the albums promoting the newsletter, workshops, and consulting services from an AI expert. That first song is a chart-topper!

This was a super fun exploration into what’s possible – and I leaned into the fun and corniness. Now, on to my thoughts on tech & things:

⚡️ The European Union has opened an investigation into potential non-compliance with the Digital Markets Act (DMA) by Google, Apple, and Meta. Observing the situation unfold has been fascinating, but it seems that we’re reaching a point where the act’s provisions and the EU’s expectations for company responses are coming into conflict. I find myself agreeing with Gruber’s sentiment: “The European Commission is edging closer and closer to saying that successful platforms have no right to monetize their intellectual property on those platforms.”

⚡️ The Department of Justice’s decision to file an antitrust case against Apple, labeling the company as a monopoly, is a significant development. This case raises important questions about what constitutes a feature and what defines an illegal monopoly. As an Apple enthusiast, I appreciate the seamless integration and user experience that Apple’s ecosystem provides. Features like AirPlay, iMessage, and continuity between devices are undeniably convenient and enhance the overall user experience. The outcome of the DOJ’s antitrust case against Apple will have significant implications for the tech industry as a whole. It will be interesting to see how the court interprets the boundaries between features, ecosystem lock-in, and anticompetitive practices.

During this whole process, I found myself amazed and equally frustrated. RunwayML is an amazing tool: when it generates something right, it’s incredible; when it misses, it’s so frustrating. I think what’s toughest about RunwayML, and really any image or video generator, is that editing the prompt starts you all over again.

When I started, I hoped I could use the same images to generate videos for both 16:9 and 9:16 displays or, said differently, horizontal video for TVs and laptops and portrait video for phones and social apps like Instagram. The thing is, you had to create these images separately, and the reference image for a video needed to already be that size. This drove me insane. When you find something you like, you want to keep using it, but each generation is original, so even with the exact same prompt you couldn’t catch that magic again.

More features are coming that take initial image prompts to generate new images or allow you to better define a character, so I have no question it will get better – but it is an issue.

All of these tools feel so close. Of course, if you need snippets to add to existing videos rather than something more ambitious like a fully AI-generated ad, this tool is more than ready to shine.

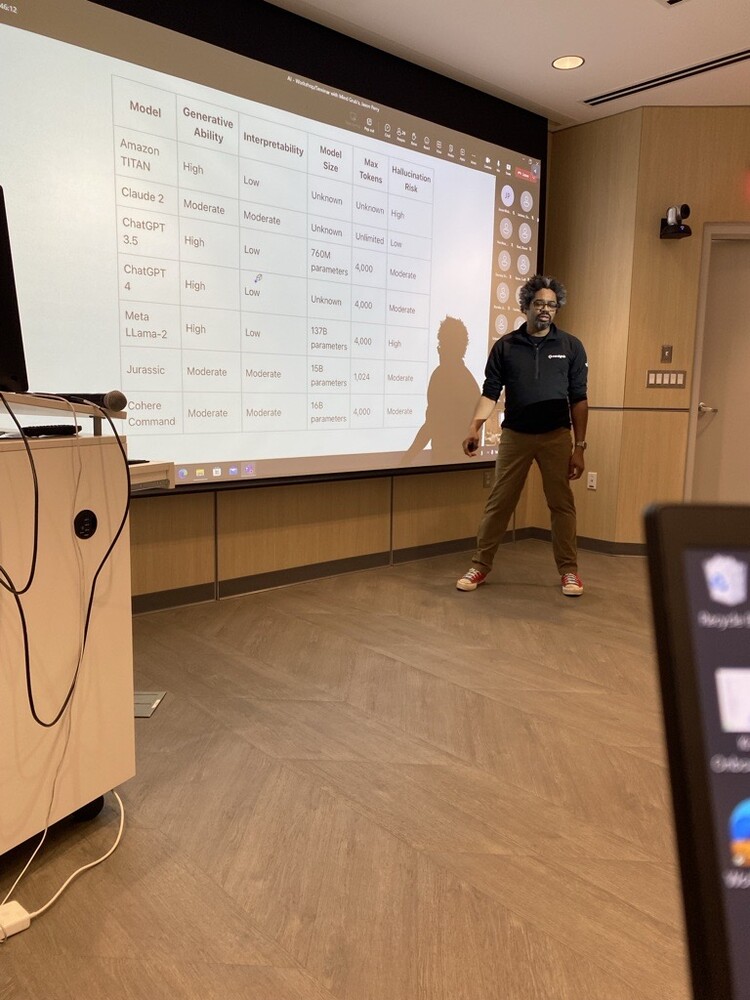

The tools featured in this newsletter issue are on show in my new AI workshop, which starts with an introduction to AI and how it works. The workshop then moves through a tour of the different AI tools I love, with quick walkthroughs and demos on how to use them. If you are learning about AI for the first time or want to get a group smart quickly, reach out. I just delivered this as a private course to the happy team at Prometric last week.

I’m rolling out public versions of these courses online and in person on weekends. Take a look and consider signing up for one of these great classes.

-jason

p.s. I know space is filled with tons and tons of satellites, but this real-time tracker of SpaceX’s thousands of Starlink satellites orbiting Earth blew my mind. Check it out.