Issue #39: Exploring the World of Text-to-Video AI Models

Howdy 👋🏾, last month, Tyler Perry announced he was pulling back from his $800 million investment in a new film studio in Atlanta. This decision came after he laid eyes on OpenAI’s new AI diffusion model, Sora, which can create video from a text prompt. If you haven’t already, check out Sora’s videos; they’re amazing. Unfortunately, Sora is quite locked down, with access limited to a select audience, and plans for greater availability later this year (if you know someone, I would love access). However, it’s far from the only option, so this week, I thought I would give you a tour of some of the text-to-video AI models and how they work.

As a refresher, ChatGPT and most text-generative AI models are Large Language Models, which work as a very complex auto-suggest, guessing the next word or token in a phrase based on its training data. Most image models are diffusion models that diffuse or break up parts of an image bit by bit to understand its elements better. They then use that information to understand an image’s elements and use that data to generate new images or artwork. Stable Diffusion puts it right in the name, but Midjourney and DALL-E are also examples of diffusion models.

To date, most text-to-video models are limited to creating about 1 minute of video, as shown in Sora’s technical report comparing the differences between videos generated with different levels of compute power. Many of these models can also start with an image and transform it into a video or an animation, or extend an existing video to finish or complete it.

RunwayML

RunwayML’s new Gen-2 AI model allows you to create videos from a text prompt, extend an existing video, or use an existing image. I set up a free account that limits me to a handful of attempts and 4 seconds of generated video.

I asked RunwayML if it could create a claymation video of a black man with large hair asking you to please subscribe to his newsletter, and it did not disappoint.

I grabbed a photo from Equitech Tuesdays at Guilford Hall Brewery by photographer Ian Harpool to check out how well it adds motion to existing images. First, let me show you the original photo:

This is the video generated from that photo:

Pika

Pika has some extra abilities, including the power to dynamically add sound effects to your generated videos, take an existing image or video, and sync the person’s mouth to an audio clip. Pika also supports the same features as RunwayML, such as video from prompts, video from existing images, and the ability to extend an existing image. Some of these features require a paid account, but you can get a feel for the model’s abilities using the free plans.

I also asked Pika to generate a video of a 3D closeup of a black man with large hair frantically telling people that the M&M vending machines are spying on us (You’ll understand when you get to the p.s.).

I asked Pika to convert that same existing image to video, and it came back with this:

Of course, these are early days, and plenty of video-generating AI models are getting closer to show time. Stability AI, makers of Stable Diffusion, released an early version of Stable Video – an open model you can download and stand up on your own hardware. I’ve personally dabbled with a handful of video models on Huggingface that show tons of possibility. These things are getting better and better. Now, my thoughts on tech & things:

⚡️I believe that the quality of Google Search has been declining for years. However, combining AI and large media organizations employing extensive SEO tactics to sell affiliate products has taken a significant toll, making Google Search quite subpar. I suspect that Google is feeling the pressure from newer AI search upstarts like Perplexity AI and has finally taken steps to weed out the worst offenders.

⚡️Anthropic recently released a new family of AI models and compared them to OpenAI’s ChatGPT 4, but what does that really mean? To date, many AI companies have declared the greatness of their models based on how well they can pass medical exams or the bar. However, that’s not how we use these AI assistants in our daily lives. I want to know if an AI can tell me how much water to use for long-grain rice versus jasmine rice or if it can check my estimates on conversion rates. Techcrunch has a great piece on why AI benchmarks seem to tell us so little when it comes to our everyday use of AI models.

⚡️Apple’s reactions to the EU’s DMA (Digital Markets Act) have felt uncharacteristic for the company, which seems to have done everything it can to meet the letter of the law and nothing more. The most recent blip was Apple’s sudden banning of Epic Sweden from opening its own App Store marketplace in the EU, followed by Apple quickly reinstating it. John Gruber has a good write-up of what happened.

⚡️A company at SXSW used generative AI to bring Marilyn Monroe to life through an interactive bot that mimics her voice, emotions, and physical reactions. The world of digital avatars continues to feel like the uncanny valley but it also opens the door for some truly interesting possibilities.

⚡️After last year’s tumultuous events at OpenAI, which saw Sam Altman fired, two CEOs hired, and Sam Altman rehired, the company finally completed its investigation, returning Sam Altman to OpenAI’s board. The whole saga has been fascinating to watch, and now, Elon Musk – who donated millions to help start OpenAI – is suing the company. This has led to a rather public exchange of words and the public sharing of past emails on OpenAI’s website.

This week, I had a great time delivering an introductory AI talk to the Tristate HR Association at Rowan College in Southern New Jersey. I walked the group through how AI works and its history. I also offered some interactive demos of creating chatbots using OpenAI’s assistants that can answer questions about HR policy from employee handbooks or API calls to HRIS (Human Resources Information Systems).

I have more of these talks lined up and would love to speak at your next event or conference or craft a private talk on how your organization can use AI. I’m also working to launch in-person and online half-day workshops, so keep your eyes open or check out my website for more information and availability.

-jason

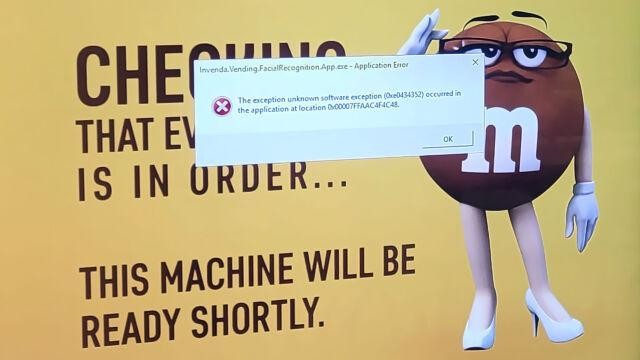

p.s. A facial recognition-equipped M&M vending machine on a college campus was discovered thanks to an error message. The company uses it to understand buyer demographics better and determine repeat visitors, but it seems unnecessary.