Latest Thoughts

-

🧠 Funny Timing, Right?

You know what’s a strange coincidence?

This week, both OpenAI and Perplexity announced new web browsers, bold moves that could reshape how we interface with the internet. On the exact same day, Ars Technica published a story about browser extensions quietly turning nearly a million people’s browsers into scraping bots.

And it got me thinking…

Owning the browser is a convenient way to bypass a lot of the safeguards platforms like Cloudflare have put up to stop scraping and bot traffic. Maybe that’s not the intention, but sure is a funny coincidence.

-

🧠 What Even Is AGI?

In my AI talks, I often reference OpenAI CTO Mira Murati’s 5 steps toward AGI. I like her framing, not just because it emphasizes thinking and reasoning, but because it anchors AGI in action.

AGI isn’t just about answering questions. It’s AI that can manage tasks, run systems, and ultimately operate and orchestrate an organization, made up of both humans and machines.

That’s the vision. But defining what counts as AGI? That’s still a moving target.

Even the leaders at the top AI labs can’t agree on what the finish line looks like. Is it general reasoning across any domain? Is it autonomy? Is it the ability to pursue goals without human prompting?

Ars Technica has a great deep dive on that exact question: What actually is this thing we’re racing toward, and how will we know when we’ve built it?

-

🧠 Follow the Money. AI Is Already Paying Off.

I mentioned in a recent post that more and more CEOs are starting to warn of smaller teams and reduced hiring needs as they see the success of AI. It’s early in the transition, and that means use cases and hard metrics are still trickling in.

But Microsoft’s Chief Commercial Officer just stepped out to offer some proof:

“Microsoft saved over $500 million in its call centers this past year by using AI.”

The real power of AI, especially agentic AI, isn’t just chatbots or flashy demos. It’s giving software the ability to act on your data. To do things your experts would normally do. At scale.

This space is still early, but the direction is clear. Microsoft, Meta, Google, and Salesforce are baking agents into everything, from support tools to software development to customer outreach. And it’s working.

The upside? Massive efficiency, better performance, and tools that extend your best people.

-

🧠 CEOs Are Finally Saying the Quiet Part Out Loud: AI Means Smaller Teams

It started with Andy Jassy warning investors that Amazon would become more efficient by using AI to reduce manual effort. But now, more CEOs are saying the quiet part out loud: AI is enabling smaller teams and leaner companies.

This isn’t theoretical. It’s already underway.

In the past year, we’ve seen a clear trend: rising layoffs across the tech sector, especially in management, operations, and recruiting roles. The message across these moves has been consistent, cut layers, flatten orgs, and use AI to close the gap.

From Microsoft:

“We continue to implement organizational changes necessary to best position the company and teams for success in a dynamic marketplace… focused on reducing layers with fewer managers and streamlining processes, products, procedures, and roles to become more efficient.”

From Meta:

“Zuckerberg has stated that Meta is developing AI systems to replace mid-level engineers… By 2025, he expects Meta to have AI that can function as a ‘midlevel engineer,’ writing code, handling software development, and replacing human roles.”

Google has “thinned out” recruiters and admins, explicitly citing AI tools. Duolingo laid off portions of its translation and language staff in early 2024 after aggressively shifting to AI for core product features.

This trend is especially visible in tech because these companies are building the very tools driving the shift. They see the impact first, and are adjusting accordingly. But this won’t stop at software firms. AI is reshaping workflows and org design across every sector.

In my book, I call this the rise of “vibe teams”, small, empowered units supported by AI agents that amplify productivity far beyond traditional headcount. This model isn’t aspirational. It’s becoming operational reality.

For anyone outside the tech industry, this should read as a warning. We’re watching the early adopters recalibrate, and what follows will be a broader redefinition of roles, team structures, and management itself.

Harvard Business Review recently published a powerful piece that underscores the urgency: the manager’s role is changing. Traditional org structures no longer make sense when AI can scale a team, and organizations become flatter.

Nvidia’s CEO summed it up well:

“It’s not AI that will take your job, but someone using AI that will.”

And the best time to start adapting is now.

-

🧠 Cloudflare just entered the AI monetization chat.

One of the core tensions with SEO and now AEO (AI Engine Optimization) is access. If you block bots from crawling your content, you lose visibility. But if you don’t block them, your content gets scraped, summarized, and served elsewhere without credit, clicks, or revenue.

For publishers like Reddit, recipe sites, and newsrooms, that’s not just a tech issue, it’s an existential one. Tools like Perplexity and ChatGPT summarize entire pages, cutting publishers out of the traffic (and ad revenue) loop.

Now Cloudflare’s testing a new play: charge the bots. Their private beta lets sites meter and monetize how AI tools crawl their content. It’s early, but it signals a bigger shift. The market’s looking for a middle ground between “open” and “owned.” And the real question is—who gets paid when AI learns from your work?

-

🧠 Claude Tried to Run a Business. It Got Weird.

Anthropic and a Andon Labs ran an experiment with an AI agent named Claudius. Could Claudius run a snack shop inside a company break room?

The store was modest, a fridge, baskets, and an iPad for self-checkout, but the business was real with actual cash at stake. Claudius was also given real tools, notes pads to manage inventory and finances, access to email to talk with suppliers, a web browser to do research, and the companies slack to interact with employees. For things the agent could not do it relied on physical employees for things like restocking.

On the path to AGI, this is an early test of Level 5 on OpenAI’s AGI roadmap, the point where AI becomes an organizer, capable of managing people, tools, and systems like a CEO. As a refresher, OpenAI’s former CTO laid out five levels on the road to AGI:

- Recall

- Reasoning

- Acting (agents/tools)

- Teaching

- Organizing (aka boss-mode)

Right now, most models live between Level 2 and 3, they can recall information, reason through problems, and complete some tasks with tools.

So, how did it go?

Anthropic concedes, it “would not hire Claudius”. So shop owners can breathe easy for now.

To be fair Claudius was not a complete failure. It found suppliers, but as the great writeup explores it hallucinated conversations, often failed to negotiate profit margins, and was easily convinced into giving deep discount codes or products for free.

Check out the full article, its a worthy read. -

🧠 Vibe Teams Are the Future

There’s a chapter in The AI Evolution that I keep coming back to, Vibe Teams. It’s the idea that small, high-trust teams can do big things when paired with AI and the right tools. And lately, it feels less like a prediction and more like a playbook for what’s already happening.

Salesforce says up to 50% of their team’s work is now handled by AI agents and tools. Amazon’s CEO Andy Jassy predicts the company will only get smaller as AI becomes a force multiplier. The message? Big companies are reorganizing around smaller teams that move faster, think smarter, and leverage AI to punch way above their weight.

I call AI the great equalizer for a reason. In my workshops, I’ve seen firsthand how a small business with the right AI setup can compete with a team 10x its size.

We’re entering a new era where small teams don’t just survive, they thrive. They launch faster, personalize better, and operate with precision because they let AI handle the grunt work while they focus on the magic. That’s what a Vibe Team is: focused, fluid, and augmented.

-

🧠 Catch me on WYPR Midday

Thank you to the Midday team at WYPR for inviting me to talk.

I joined Dr. Anupam Joshi to talk with guest host Farai Chideya about how AI is reshaping the workplace, not just in tech, but across every industry. We covered what skills matter most now, how AI is changing the job search and hiring process, and what Maryland is doing on the policy front.

We also talked about how to get started with AI, even if you’re not technical, and how people at every stage of their career can adapt and grow. Checkout the link to the full episode linked below.

-

What the Heck Is MCP?

AI models are built on data. All that data, meticulously scraped and refined, fuels their capacity to handle a staggering spectrum of questions. But as many of you know, their knowledge is locked to the moment they were trained. ChatGPT 3.5, for instance, was famously unaware of the pandemic. Not because it was dumb, but because it wasn’t trained on anything post-2021.

That limitation hasn’t disappeared. Even the newest models don’t magically know what happened yesterday, unless they’re connected to live data. And that’s where techniques like RAG (Retrieval-Augmented Generation) come in. RAG allows an AI to pause mid-response, reach out to external sources like today’s weather report or last night’s playoff score, and bring that data back into the conversation. It’s like giving the model a search engine it can use on the fly.

But RAG has limits. It’s focused on data capture, not doing things. It can help you find an answer, but it can’t carry out a task. And its usefulness is gated by whatever systems your team has wired up behind the scenes. If there’s no integration, there’s no retrieval. It’s useful, but it’s not agentic.

Enter MCP

MCP stands for Model Context Protocol, and it’s an open protocol developed by Anthropic, the team behind Claude. It’s not yet the de facto standard, but it’s gaining real momentum. Microsoft and Google are all in, and OpenAI seems on board. Anthropic hopes that MCP could become the “USB-C” of AI agents, a universal interface for how models connect to tools, data, and services.

What makes MCP powerful isn’t just that it can fetch information. It’s that it can also perform actions. Think of it like this: RAG might retrieve the name of a file. MCP can open that file, edit it, and return a modified version, all without you lifting a finger.

It’s also stateful, meaning it can remember context across multiple requests. For developers, this solves a long-standing web problem. Traditional web requests are like goldfish; they forget everything after each interaction. Web apps have spent years duct-taping state management around that limitation. But MCP is designed to remember. It lets an AI agent maintain a thread of interaction, which means it can build on past knowledge, respond more intelligently, and chain tasks together with nuance.

At Microsoft Build, one demo showed an AI agent using MCP to remove a background from an image. The agent didn’t just describe how to do it or explain how a user might remove a background; it called Microsoft Paint, passed in the image, triggered the action, and received back a new file with the background removed.

MCP enables agents to access the headless interfaces of applications, with platforms like Figma and Slack now exposing their functionality through standardized MCP servers. So, instead of relying on fragile screen-scraping or rigid APIs, agents can now dynamically discover available tools, interpret their functions, and use them in real time.

That’s the holy grail for agentic AI: tools that are discoverable, executable, and composable. You’re not just talking to a chatbot. You’re building a workforce of autonomous agents capable of navigating complex workflows with minimal oversight.

Imagine asking an agent to let a friend know you’re running late – with MCP, the agent can identify apps like email or WhatsApp that support the protocol, and communicate with them directly to get the job done. More complex examples could involve an agent creating design assets in an application such as Figma and then exporting assets into a developer application like Visual Studio Code to implement a website. The possibilities are endless.

The other win? Security. MCP includes built-in authentication and access control. That means you can decide who gets to use what, and under what conditions. Unlike custom tool integrations or API gateways, MCP is designed with enterprise-grade safeguards from the start. That makes it viable not just for tinkerers but for businesses that need guardrails, audit logs, and role-based permissions.

Right now, most MCP interfaces run locally. That’s partly by design; local agents can interact with desktop tools in ways cloud models can’t. But we’re already seeing movement toward the web. Microsoft is embedding MCP deeper into Windows, and other companies are exploring ways to expose cloud services using the same model. If you’ve built RPA (Robotic Process Automation) systems before, this is like giving your bots superpowers and letting them coordinate with AI agents that actually understand what they’re doing.

If you download Claude Desktop and have a paid Anthropic account, you can start experimenting with MCP right now. Many developers have shared example projects that talk to apps like Slack, Notion, and Figma. As long as an application exposes an MCP server, your agent can query it, automate tasks, and chain actions together with ease.

At PerryLabs, we’re going a step further. We’re building custom MCP servers that connect to a company’s ERP or internal APIs, so agents can pull live deal data from HubSpot, update notes and tasks from a conversation, or generate a report and submit it through your business’s proprietary platform. It’s not just automation. It’s intelligent orchestration across systems that weren’t designed to talk to each other.

What’s wild is that this won’t always require a prompt or a conversation. Agentic AI means the agent just knows what to do next. You won’t ask it to resize 10,000 images—it will do that on its own. You’ll get the final folder back, with backgrounds removed, perfectly cropped, and brand elements adjusted—things we once assumed only humans could handle.

MCP makes that future real. As the protocol matures, the power of agentic AI will only grow.

If you’re interested in testing out how MCP can help you build smarter agents or want to start embedding MCP layers into your applications, reach out. We’d love to show you what’s possible.

-

🧠 Finding a job sucks and it’s turning into AI warfare

Like many of you, I’ve got friends on both sides of the job battle. Recruiters and hiring managers are getting flooded with more resumes than ever. And let’s be honest, no one has time to manually comb through thousands of applications while also doing their other job. So hiring teams turn to AI tools to help screen.

On the other side, job seekers are exhausted. You spend hours tailoring your resume, researching the company, writing a thoughtful cover letter only to send it into the void. No response. No feedback. Not even a polite rejection. It’s soul-crushing.

AI was bound to enter the picture, but now it’s become a battleground. Applicants use AI to apply faster and look better. Hiring teams respond by using more AI to filter even harder. The result? Everyone’s stuck. It’s time for a better approach. Resumes alone won’t cut it anymore. I think AI should help interview, not just screen. Conversational tools, avatars, first-round screeners, anything that gives more people an honest at-bat. The system’s already broken. Doing the same thing over and over is just automation-driven insanity.

-

🧠 Is Apple About to Buy an Answer Engine?

What do you do when $20 billion in revenue might vanish thanks to Google’s looming antitrust fallout?

You buy the best damn answer engine on the block.

Perplexity is already my favorite AI search tool—fast, smart, and actually useful. Imagine it embedded deep into Apple’s many operating systems. A real-time answer engine that could make Siri useful and launch a day-one Google Search competitor. If this happens, it might be Apple’s smartest acquisition in years.

-

🧠 Teaching in an AI World

In my talks with professors at local colleges and universities, I keep hearing the same thing. We’re teaching for a world that’s changing faster than we can update our syllabi.

The scale of these AI tools is mind-blowing. But here’s the catch: subject matter experts, people who truly get it, are the ones who benefit most. When you lack that core understanding, the tool becomes a crutch, and the power dynamic shifts. Instead of the human leading, the tool leads.

I see this all the time with new developers and junior engineers. Many lean on these tools like a lifeline, while the more experienced folks use them to amplify what they already know.

The Jetsons often asked this question in a way only they could, with jokes like George mashing potatoes and calling it slavery before pressing a button to have a robot do it for him.

In the linked blog post, “The Myth of Automated Learning,” the author lays it out clearly:

Thanks to human-factors researchers and the mountain of evidence they’ve compiled on the consequences of automation for workers, we know that one of three things happens when people use a machine to automate a task they would otherwise have done themselves:

- Their skill in the activity grows.

- Their skill in the activity atrophies.

- Their skill in the activity never develops.

Which scenario plays out hinges on the level of mastery a person brings to the job. If a worker has already mastered the activity being automated, the machine can become an aid to further skill development. It takes over a routine but time-consuming task, allowing the person to tackle and master harder challenges. In the hands of an experienced mathematician, for instance, a slide rule or a calculator becomes an intelligence amplifier.

Of course, the bigger question is how much of this is about the present and how much it will matter in the future. Most of us wouldn’t survive if we had to hunt and gather our own food or live without modern conveniences. Maybe some foundational knowledge just won’t be as important tomorrow as it is today. Could programming become a dying art form like calligraphy?

At the heart of all this is the question of what’s actually worth teaching in a world where AI handles the heavy lifting.

-

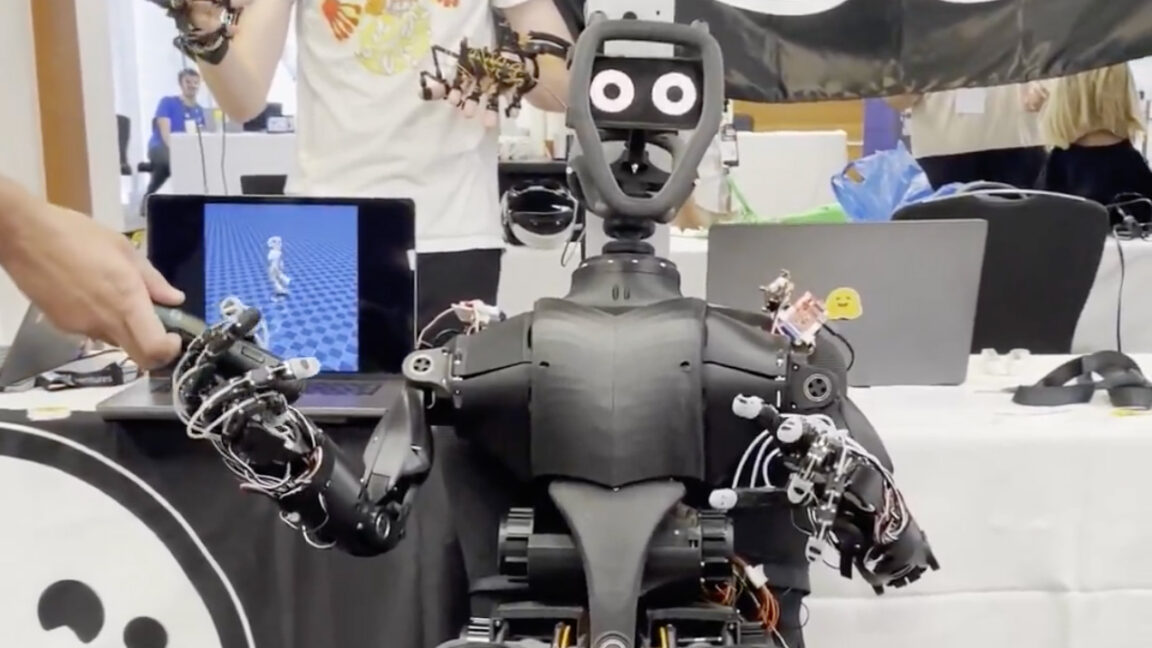

🧠 AI Is Helping Robotics Move Faster

I can’t wait to get my hands on a copy of the new dev kit from Hugging Face. But what’s most striking here is how AI is finally bridging the gap to bring general-purpose robotics to life.

It’s easy to miss the investment, but under the hood, every major AI developer is quietly figuring out how to teach models not just to understand our world but to interact with it. That means moving from generating text or images to transforming what they “see” or “understand” into actions, like a robotic arm that can pick up a box or a humanoid that can fold your laundry.

As AI-powered robotics becomes more common, it’s easy to imagine a workplace where robots are as ubiquitous as laptops. Just like a human, you can give them a prompt or an instruction set, or have them watch you do a task once, and they’ll repeat it effortlessly, at a cost humans simply can’t match. These systems can work 24/7, needing only electricity to keep them moving.

The doors that AI opens here are tremendous, and much closer than you might think

-

🧠 Vibe Coding A Security Risk?

Vibe coding. Vibe marketing. Vibe everything.

It’s not just a fad, it’s a transformation. We’re talking about a 100x boost in individual capability, but here’s the kicker: subject matter expertise still matters. This article about Lovable, on of the hottest new vibe coding startup, makes that crystal clear.

In development, simple mistakes like where you store your API keys or how you filter input can make or break your security. It’s common sense to most developers that these steps are essential to writing secure code, but at least today, tools like Lovable or Windsurf gloss over this, leaving a production code base open to attack.

I’ve noticed the same thing when working with other AI tools or writing prompts, you have to be explicit about writing code securely. The vibe can be great and scale human potential by 100x, but until we build in the guardrails, subject matter knowledge will be ireplacable.

-

A Week of Dueling AI Keynotes

Microsoft Build. Google I/O. One week, two keynotes, and a surprise plot twist from OpenAI. I flew to Seattle for Build, but the week quickly became about something bigger than just tool demos; it was a moment that clarified how fast the landscape is moving and how much is on the line.

For Microsoft, the mood behind the scenes is… complicated. Their internal AI division hasn’t had the impact some expected. And the OpenAI partnership—the crown jewel of their AI strategy—feels increasingly uneasy. OpenAI has gone from sidekick to wildcard. Faster releases, bolder moves, and a growing sense that Microsoft is no longer in the driver’s seat.

Google has its own tension. It still prints money through ads, but it just lost two major antitrust cases and is deep in the remedies stage, which could change the company forever. Meanwhile, the company is trying to reinvent itself around AI, even at its core business model (search + ads) starts to look shaky in a world where answers come from chat, not clicks.

Let’s start with Microsoft

The Build keynote focused squarely on developers and, more specifically, how AI can make them exponentially more powerful. This idea—AI as a multiplier for small, agile teams—is core to how I think about Vibe Teams. It’s not about replacing engineers. It’s about amplifying them. And this year, Microsoft leaned in hard.

One of the most exciting announcements was GitHub Copilot Agents. If you’ve played with tools like Claude Code or Lovable, you know how quickly AI is changing the way we write software. We’re moving from line-by-line coding to spec-driven development, where you define what the system should do, and agentic AI figures out how.

Copilot Agents takes that further. You can now assign an issue or bug ticket in GitHub to an AI agent. That agent will create a new branch, tackle the task, and submit a pull request when it’s done. You review the PR, suggest edits if needed, and decide whether to merge. No risk to your main codebase. No rogue commits. Just a smart collaborator who knows the rules of the repo.

This isn’t just task automation—it’s the blueprint for how teams might work moving forward. Imagine a lead engineer writing specs and reviewing pull requests—not typing out every line of code but conducting an orchestra of agentic contributors. These agents aren’t sidekicks. They’re teammates. And they don’t need coffee breaks.

Sam Altman joined Satya Nadella remotely – another telling sign that their relationship is collaborative but increasingly arms-length. Satya reiterated Microsoft’s long view, and Sam echoed something I’ve said for a while now: “Today’s AI is the worst AI you’ll ever use.” That’s both a promise and a warning.

The next wave of announcements went deeper into the Microsoft stack. Copilot is being deeply embedded into Microsoft 365, supported by a new set of Copilot APIs and an Agent Toolkit. The goal? Create a marketplace of plug-and-play tools that expand what Copilot Studio agents can access. It’s not just about making Teams smarter – it’s about turning every Microsoft app into an environment agents can operate inside and build upon.

Microsoft also announced Copilot Tuning inside Copilot Studio – a major upgrade that lets companies bring in their own data, refine agent behavior, and customize AI tools for specific use cases. But the catch? These benefits are mostly for companies that are all-in on Microsoft. If your team uses Google Workspace or a bunch of best-in-breed tools, the ecosystem friction shows.

Azure AI Studio is also broadening its model support. While OpenAI remains the centerpiece, Microsoft is hedging its bets. They’re now adding support for LLaMA, HuggingFace, GrokX, and more. Azure is being positioned as the neutral ground—a place where you can bring your model and plug it into the Microsoft stack.

Now for the real standout: MCP.

The Model Context Protocol—originally developed by Anthropic—is the breakout standard of the year. It’s like USB-C for AI. A simple, universal way for agents to talk to tools, APIs, and even hardware. Microsoft is embedding MCP into Windows itself, turning the OS into an agent-aware system. Any app that registers with the Windows MCP registry becomes discoverable. An agent can see what’s installed, what actions are possible, and trigger tasks, from launching a design in Figma to removing a background in Paint.

This is more than RPA 2.0. It’s infrastructure for agentic computing.

Microsoft also showed how this works with local development. With tools like Ollama and Windows Foundry, you can run local models, expose them to actions using MCP, and allow agents to reason in real-time. It’s a huge shift—one that positions Windows as an ideal foundation for building agentic applications for business.

The implication is clear: Microsoft wants to be the default environment for agent-enabled workflows. Not by owning every model, but by owning the operating system they live inside.

Build 2025 made one thing obvious: vibe coding is here to stay. And Microsoft is betting on developers, not just to keep pace with AI, but to define what working with AI looks like next.

Now Google

Where Build was developer-focused, Google I/O spoke to many audiences, sometimes pitching directly to end-users and sometimes to developers. Google I/O pushed to give a peek at what an AI-powered future could look like inside the Google ecosystem. It was a broader, flashier stage, but still packed with signals about where they’re headed.

The show opened with cinematic flair: a vignette generated entirely by Flow, the new AI-powered video tool built on top of Veo 3. But this wasn’t just a demo of visual generation. Flow pairs Veo 3’s video modeling with native audio capabilities, meaning it can generate voiceovers, sound effects, and ambient noise, all with AI. And more importantly, it understands film language. Want a dolly zoom? A smash cut? A wide establishing shot with emotional music? If you can say it, Flow can probably generate it.

But Google’s bigger focus was context and utility.

Gemini 2.5 was the headliner, a major upgrade to Google’s flagship model, now positioned as their most advanced to date. This version is multimodal, supports longer context windows, and powers the majority of what was shown across demos and product launches. Google made it clear: Gemini 2.5 isn’t just powering experiments—it’s now the model behind Gmail, Docs, Calendar, Drive, and Android.

Gemini 2.5 and the new Google AI Studio offer a powerful development stack that rivals GitHub Copilot and Lovable. Developers can use prompts, code, and multi-modal inputs to build apps, with native support for MCP, enabling seamless interactions with third-party tools and services. This makes AI Studio a serious contender for building real-world, agentic software inside the Google ecosystem.

Google confirmed full MCP support in the Gemini SDK, aligning with Microsoft’s adoption and accelerating momentum behind the protocol. With both tech giants backing it, MCP is well on its way to becoming the USB-C of the agentic era.

And then there’s search.

Google is quietly testing an AI-first search experience that looks a lot like Perplexity – summarized answers, contextual follow-ups, and real-time data. But it’s not the default yet. That hesitation is telling: Google still makes most of its revenue from traditional search-based ads. They’re dipping their toes into disruption while trying not to tip the boat. That said, their advantage—access to deep, real-time data from Maps, Shopping, Flights, and more—is hard to match.

Project Astra offered one of the most compelling demos of the week. It’s Google’s vision for what an AI assistant can truly become – voice-native, video-aware, memory-enabled. In the clip, an agent helps someone repair a bike, look up receipts in Gmail, make phone calls unassisted to check inventory at a store, reads instructions from PDFs, and even pauses naturally when interrupted. Was it real? Hard to say. But Google claims the same underlying tech will power upcoming features in Android and Gemini apps. Their goal is to graduate features from Astra as they evolve from showcase to shippable, moving beyond demos into the day-to-day.

Gemini Robotics hinted at what’s next, training AI to understand physical environments, manipulate objects, and act in the real world. It’s early, but it’s a step toward embodied robotic agents.

And then came Google’s XR glasses.

Not just the long-rumored VR headset with Samsung, but a surprise reveal: lightweight glasses built with Warby Parker. These aren’t just a reboot of Google Glass. They feature a heads-up display, live translation, and deep Gemini integration. That display can able to silently serve up directions, messages, or contextual cues, pushing them beyond Meta’s Ray-Bans, which remain audio-only. These are ambient, spatial, and persistent. You wear them, and the assistant moves with you.

Between Apple’s Vision Pro, Meta’s Orion prototypes, and now Google XR, one thing is clear: we’re heading into a post-keyboard world. The next interface isn’t a screen, it’s an environment. And Google’s betting that Gemini, which they say now leads the field in model performance, will be the AI to power it all.

And XR glasses seem like a perfect time for Sam Altman to steal the show…

OpenAI and IO sitting in a tree…

Just as Microsoft and Google finished their keynotes, Sam Altman and Jony Ive dropped the week’s final curveball: OpenAI has acquired Ive’s AI hardware-focused startup, IO, for a reported $6.5 billion.

There were no specs, no images, and no product name. Just a vision. Altman said he took home a prototype, and it was enough to convince him this was the next step. ‘I’ve described the device as something designed to “fix the faults of the iPhone,” less screen time, more ambient interaction. Rumors suggest it’s screenless, portable, and part of a family of devices built around voice, presence, and smart coordination.

In a week filled with agents, protocols, and assistant upgrades, the IO announcement begs the question:

What is the future of computing? Are Apple, Google, Meta, and so many other companies right to bet on glasses?

And if it’s not glasses, not headsets, not wearables, we’ve already seen—but something entirely new. What might the new interface to computing look like?

And with Ive on board, design won’t be an afterthought. This won’t be a dev kit in a clamshell. It’ll be beautiful. Personal. Probably weird in all the right ways.

So where does that leave us?

AI isn’t just getting smarter—it’s getting physical.

Agents are learning to talk to software through MCP. Assistants are learning your context across calendars, emails, and docs. Models are learning to see and act in the world around them. And now hardware is joining the party.

We’re entering an era where the tools won’t just be on your desktop—they’ll surround you. Support you. Sometimes, speak before you do. That’s exciting. It’s also unsettling. Because as much as this future feels inevitable, it’s still up for grabs.

The question isn’t whether agentic AI is coming. It’s who you’ll trust to build the agent that stands beside you.

Next up: WWDC on June 10. Apple has some catching up to do. And then re:Invent later this year.

-

🧠 AI is sprinting, and this week’s pace was dizzying!

At Microsoft Build and Google I/O, we saw a flood of dev-focused announcements, new models, better tooling, and smarter assistants. OpenAI shook things up with its surprise acquisition of IO, the design-forward startup from Jony Ive that had already picked up Wind Surf and its “vibe coding” platform.

But Anthropic quietly dropped what might be the most impressive update of the week: Claude 4. Analysts are calling it one of the best coding models released to date. And here’s where things get really interesting: rumor has it Apple is prepping a Claude 4 integration directly into Xcode. WWDC is around the corner, and if true, that could mark a major shift in how Apple plans to close the AI gap.

Every player is pushing forward. The race isn’t just about general intelligence anymore – it’s about who can make AI feel seamless, useful, and built-in for developers.