Issue #40: Revisiting Apple Vision Pro

Howdy👋🏾, as I move into my second month with the Apple Vision Pro, I wanted to revisit my review and share some additional thoughts. I still love the device, but I must admit that I don’t use it on a daily basis. However, over the past two months, I’ve demonstrated the device to over 50 people. Mindgrub even hosted an event to showcase the power of mixed reality and offer demos to business leaders throughout the DMV area.

I’ve also had the opportunity to use the device in many settings, such as on a plane, on an Amtrak train to Philadelphia, and at several restaurants around town. These experiences have given me a good understanding of Apple Vision Pro’s pros and cons.

The Fit

I love the look and feel of the woven single-band strap, but after switching to the dual loop, I discovered that many of my fit issues are due to the device’s weight, causing it to slip down my face slowly. In my original review, I mentioned how hard it was to drink a glass of water, but with the dual loop, I feel comfortable drinking a hot cup of coffee with no issues. I also found the device less blurry and more comfortable to wear. While the solo loop is iconic and beautiful, I recommend ditching it and embracing the dual loop.

The tighter and better fit fixed many things, but I still get unhelpful warning messages about how and where the device sits on my head. If I use it while eating, it’s normal to get a warning that an object is too close to my head – yeah, I know, I see it. I also often get warnings to move the Apple Vision Pro up, left, right, or down on my face, but even after adjusting, nothing seems to change.

I wore contacts for my first month with Apple Vision Pro, but recently, my Zeiss lenses arrived. These lenses magnetically snap over the device’s lenses. The snap feels a bit magical, and they stay put when I lug the device around with no issues.

To pair the new lenses, you snap them in and then look at a QR code that provides the Apple Vision Pro with your prescription. For about 30 minutes, I struggled to pair them and almost gave up, but after a quick call to Apple technical support (they were super helpful – thank you!), we discovered that my room needed to be better lit for the cameras to scan the QR code.

Keep in mind that I was in a room I consider to be adequately well-lit, with a large window to my left. The QR code was clearly visible through the video feed, and I could easily read a magazine in the lighting. Once I turned on two additional lamps, the device scanned the code and paired the lenses with no issues, allowing me to see clearly and use apps in the interface.

The Zeiss lenses have been excellent! I’m now considering buying them for my Meta Quest. They make it easier to pick up the device casually in the mornings or evenings, and since they’ve arrived, I’ve reached for the device more often. My one complaint is that it’s sometimes so easy that I leave my glasses in another room, which has inadvertently taught me the limits of pass-through video. For example, walking up the steps of my rowhome at night with the lights off can produce a pitch-black video where I would normally see the steps in front of me – but more on this later.

Another pet peeve is that Apple Vision Pro struggles when you lie down. One evening, I lay back on the sofa with plans to watch Napoleon on Apple TV, and the device seemed to refuse to accept the position.

Yes, it’s heavy, but the dual loop makes it easier for me to manage long sessions using the device. I still need to shift the device up to scratch an itch or wipe under an eye here or there, but that has become a normal thing in any VR headset.

The Interface

Gaze and pinch should be amazing, but the longer I use it, the more I find that it often misses the mark. It seems magical on first use, but as you continue to use it, you realize that your focus is only sometimes where you want an action to happen. The best place to see this is when typing. My eyes are focused on the text field I’m typing in – say, the search box on Amazon or Google – and not on the actual keyboard. This means that pinching to tap a key won’t work until I redirect my focus to each letter I want to type. It’s frustrating.

Frequently, when using the web or apps with small focus areas, you find yourself staring intently at a button, hoping to see it selected, but for whatever reason, the headset thinks you’re looking at something else. I have also discovered that it is sometimes hard to keep my focus on what I think I’m looking at by the time I tap my fingers together – something that has caused me to accidentally do so many things that I have developed a bit of “tap fear” when presented with actions that feel like they can’t be undone. In demos, this has led to people accidentally deleting apps or confirming a dialog they meant to cancel.

One of the worst incidents I remember is accidentally turning off my prescription lenses in the preferences menu when I meant to close the window. This caused eye tracking to stop working; thankfully, removing and snapping my lenses back in fixed the issue without me needing to put my contacts in to turn the settings back on.

Many of these issues disappear when you pair the Apple Vision Pro with a keyboard or trackpad (it does not support a mouse), which gives you the precision missing from eye tracking. I can’t stress enough how much better a device Apple Vision Pro is with an external keyboard and trackpad, combined with gaze and pinch. It’s a perfect combo. I like it so much that I recently purchased a $35 portable Bluetooth keyboard and trackpad that I can bring on the road or whip out in meetings – sadly, the keyboard’s trackpad does not work because Apple Vision Pro thinks it is a mouse… sigh. I’ll keep trying these out until I find one that works.

The last thing on the interface is that notifications are still a pain. They float, covering whatever you are focusing on, and they sit for what seems like forever. It’s annoying, and I hope a future version of visionOS figures out a new way to handle them.

The Darkness

Apple Vision Pro needs a lot of light. To anchor windows to objects, spatial computing needs to know the space, and that requires light. This sounds like a small issue, but the more I use the device, the more it becomes a major problem. For example, if I’m watching a movie in my bedroom or outside on my deck, it becomes challenging as the space grows darker. Annoyingly, in the middle of a movie or while using an app, the device will suddenly declare that tracking has failed and hide everything in front of me without pausing the content. Dismissing the warning sometimes brings the screens back, but other times, they disappear, requiring you to double-click the Digital Crown to bring them back. In other instances, they reappear anchored or positioned in weird or unexpected ways.

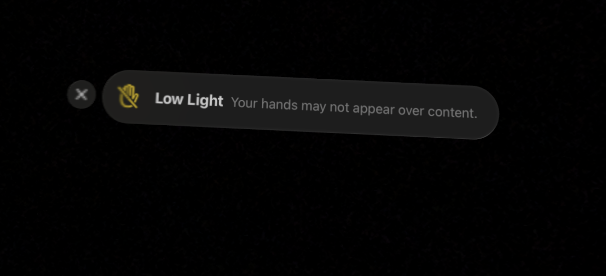

In low-light situations, Apple Vision Pro will warn you that its tracking is diminished, and the normally excellent anchoring can fail. Worse, the main way you select with the device, pinching, won’t work if the cameras can’t pick up your hands.

What’s tough is understanding when the room is too dark. My house is largely lit by its large windows, so as it gets darker outside, so does my house. During long work sessions, as the sun sets, Apple Vision Pro becomes increasingly unstable in what feel to me like reasonably lit conditions. If I want to use the device to watch a movie late at night or after my partner falls asleep, it’s nearly impossible without a bright light source in the room, which is a non-starter.

I imagine I can disable tracking in the settings as I would on a plane or train to allow Apple Vision Pro to position items without anchoring and tracking when a vehicle is moving fast or when light is insufficient, but that seems like something I shouldn’t have to do because the sun went down.

The Apps

Apple Vision Pro feels like an iPad when I want a Mac. I love the device for productivity, but the reality is that the interface should be built like a multitasking work machine, and it isn’t. Yes, I can surround myself with apps, something that was amazing on day one – but in practical use, I found myself missing the layered windowing interface of a computer. For example, while watching a cooking show, I decided to search for a new set of mixing bowls, but moving my focus to the web browser made the video window semi-translucent, letting in light or faintly showing the object behind it. If those two windows are stacked, the more prominent window also causes a weird feathering that hides large chunks of the video, making it hard to really do two things at once.

If you try to avoid that layering, it becomes difficult to position apps so you can keep them all in a single view. The issue is that while Apple Vision Pro gives you the biggest canvas you can have, it wants you to run apps like an iPad, as a modal interface where only one thing has your attention. Of course, you can surround yourself with apps, but your viewing area is fairly limited if you’re seated at a desk or table. This is especially hard with an external keyboard and trackpad when the app you’re typing into is behind you.

Unsurprisingly, the ability to connect to my Mac virtually is one of my absolute favorite features and the main way I use the device. The Mac truly shines on Apple Vision Pro, giving you a huge screen with the multitasking and layered interface I wish I could get natively. I have my gripes, like wishing for more displays or the ability to change the screen aspect ratio, but the screen is sufficiently large to make those nonissues.

Weirdly, this is also my biggest complaint. On the road or when bringing Apple Vision Pro to a conference or event, I want the same abilities as my MacBook from the device itself, without needing to bring the laptop with me. It seems crazy to sit at a conference, on a plane, or on a train with a laptop with its screen dark solely to access it virtually, especially when I know that Apple Vision Pro has the same computing power as my M2 MacBook Air.

Apple Vision Pro has tons of apps. After all, you can use iOS and iPad apps, but those apps struggle to work with eye tracking and the gaze and pinch mechanics. Most often, the issue is that an app wants a touch gesture you can’t mimic, or the interface is so dense you can’t easily focus on small icons with your eyes.

Some of these are easy fixes – at Mindgrub, we’ve discovered that we can make an app experience much better on Apple Vision Pro in just a few hours. Yet, those apps can still feel like iPad apps. I think the biggest surprise for me was the lack of imagination. Few apps tap into the power of AR and VR to use the device’s capabilities. Maybe this will change, but I hope we will see more immersive experiences or apps pushing the boundaries of Apple’s amazing ARKit.

The web offers a new range of possibilities I can’t wait to explore with more clients. My favorite is the ability to link to 3D objects and pull those objects into your own space. Sites like Ikea and Apple have long linked to 3D models of products that you can view using augmented reality on your phone or tablet. However, paired with Apple Vision Pro, these experiences become a wonder.

On Ikea’s website, I can decorate a room with furniture and place and size objects in a real space where they stay anchored to reality. The possibilities – feeling as if you are in the seat of a car, looking at how a lamp might sit on a nightstand, or virtually trying on a new watch – could be amazing.

Apple has been slow to embrace WebXR, which allows even more possibilities. However, you can turn it on in Apple Vision Pro’s settings, allowing you to move into virtual worlds on websites or interact with 3D objects on the web in amazing ways. Of course, none of this is new for Meta Quest users, but it’s nice to see these possibilities continue to emerge.

The Ecosystem

As a long-time Apple user, I’m surrounded by the large ivory walls of their ecosystem, and I’ve come to see it as one of the biggest benefits of buying an Apple device. Apple Vision Pro does not fail here. If you use Apple’s AirPods, they just connect. If your house has an Apple TV or other AirPlay device, you can easily stream the output of Apple Vision Pro to it with no issues. If you want to connect to a Mac on the same Wi-Fi, look at it and tap a virtual connect button over it. It’s amazing and very magical.

At the same time, some things I imagined Apple would have solved are missing. Let’s start with the iPhone’s Face ID, which does not work while wearing Apple Vision Pro. This is annoying, and as someone who tries to keep security in mind, I feel that typing in a passcode while wearing a device whose cameras can easily record or stream what you see is a huge oversight. Other issues include Bluetooth devices paired to both a laptop and the Apple Vision Pro, which struggle to pick which one to connect to – especially when you’re using that laptop with Apple Vision Pro.

I also wonder what could be possible – like displaying the contents of my iPhone or iPad virtually inside Apple Vision Pro, similar to what it does for my Mac, rather than looking at those screens through passthrough video.

The Conclusion

The big question is: buy, keep, or return. Apple Vision Pro is the definition of a version one product. It is unquestionably one of the best mixed-reality headsets I’ve ever used, with displays that transport you to another world in a way no other device has ever been able to.

I view the Apple Vision Pro much like my experience with the now-named Series 0 Apple Watch. The company launched with some assumptions, and it took time for the software to adapt to how people really used it and to transform the device into the one we always wanted. I like Apple Vision Pro, and I think fixing many of these very fixable issues will slowly move the device into the “love” category – but it will take time. I plan on keeping it, and I can only imagine what version 2 of visionOS, the operating system that runs these things, will look like at this year’s or next year’s WWDC.

If you’re interested, definitely demo Apple Vision Pro. Don’t purchase yet. Wait one to three years; many of these issues will get worked out, and Apple Vision Pro will become as polished as the iPhone. In two years, what once was strange will become as normal as someone walking around with AirPods. Just wait. Now, onto my thoughts on tech & things:

⚡️ Several months ago, I mentioned fear of the EV Rental Surprise. While visiting New Orleans for Mardi Gras, I was surprised when presented with the choice of a Tesla Model 3 or waiting until the next day. As an EV owner who charges mainly at home, I immediately thought, “Where am I going to charge this thing?” Luckily, with a 100% charge, we had enough juice to get us through the entire trip, but that didn’t change the range anxiety and fear that if we needed it, charging would require us to drive 20-30 minutes to locate a charger.

I love my EV and think it’s the obvious future, but the reality is that the charging infrastructure is just not ready. That makes me unsurprised to see Hertz’s CEO out after betting big on EVs, only to find customer demand not there. We will get there, but we need to invest much more in charging infrastructure before the everyday person can comfortably make the switch.

⚡️ Apple’s making a ton of AI news. Tim Cook continues to tease that this year will bring deeper AI integration to iOS. They recently snapped up a Canadian AI company known for helping compress large language models to run on hardware. Apple’s research team also released a study on a new multimodal AI model. And the cherry on top is a rumor that Apple is negotiating with Google and OpenAI to leverage their models, sealed with a photo of Tim Cook and Google’s CEO Sundar Pichai dining in a restaurant. I have high hopes for Apple’s announcements at WWDC this summer, and I guessed at the start of the year that they would take a privacy-minded approach with a multi-model solution that did as much work as it could locally on Apple’s hardware while still reaching out to cloud LLMs to help with more complex queries. That’s how I would approach it, and these moves seem to reflect just that. Also, let’s not forget that while the world depends on Nvidia GPUs, pushing its market cap into the trillions, Apple makes its own silicon with integrated GPUs and has the supply chain and chip knowledge to make a hell of an AI-powered chip.

I felt inspired to update my review after reading this blog post from Hugo Barra, a former Meta employee who shared a very in-depth view of his thoughts on Apple Vision Pro. I have to say, I expected to disagree with him and Mark Zuckerberg, but in the end, I found his opinions to be spot on. It’s definitely worth the read.

While we’re talking about Meta, I was excited to trade in my pair of Warby Parkers for the new Meta Ray-Ban glasses, but sadly, Ray-Ban does not support my prescription. I’m sure I could buy them and take them elsewhere to get lenses inserted, but I haven’t had the time to do the legwork to make all that work. I would love to try them and even make them my daily wear, but for now, I’m stuck with what I have. The reviews have been amazing, and they sound like they keep getting better and better with each software release.

⚒️ One more thing: I’m rolling out AI workshops, starting this week with one virtual and a second held in person for a few notable companies. I’m always happy to come to you and deliver tailored sessions privately at your organization, whether it’s through interactive workshops, conferences, panels, or other events. Feel free to get in touch. Additionally, you can explore my growing list of workshops and upcoming sessions on my site.

-jason

p.s. I caught wind of this on John Gruber’s Daring Fireball – but man, are these amazing and hilarious! How did I miss this? Apparently, 20 years ago in Chile, episodes of Star Wars aired with ads for Cerveza Cristal beer inserted, so they felt like part of the movie.