Author: Jason Michael Perry

-

Screen Scraping

Gizmodo has a piece on “Google Says It’ll Scrape Everything You Post Online for AI”:

One of the less obvious complications of the post ChatGPT world is the question of where data-hungry chatbots sourced their information. Companies including Google and OpenAI scraped vast portions of the internet to fuel their robot habits. It’s not at all clear that this is legal, and the next few years will see the courts wrestle with copyright questions that would have seemed like science fiction a few years ago. In the meantime, the phenomenon already affects consumers in some unexpected ways.

Twitter’s crazy rate-limiting meltdown and Reddit’s push to charge for API access are about one thing: AI data models. These systems are hungry for data, and access to that data will be vital to building the best AI models. Unsurprisingly, Google is making it known that, as the company that ranks sites and offers prime search engine placement, it considers all that delicious data fair game. When APIs close, people resort to screen scraping, and screen scraping ends with paywalls and Twitter-style rate limiting… I wonder how this all plays out.

-

Cord Cutting

From Variety’s “Cord-Cutting Hits All-Time High in Q1, as U.S. Pay-TV Subscriptions Fall to Lowest Levels Since 1992”:

Cable TV operators’ rate of decline in Q1 reached -9.9% year over year, while satellite providers DirecTV and Dish Network fell -13.4%. In addition, so-called “virtual MVPDs” (multichannel video programming distributors) lost 264,000 customers in Q1, among the worst quarters to date for the segment.

“The picture is not one that suggests that a plateau in the rate of decline is coming any time soon,” Moffett wrote.

It is no surprise that I cut the cord years ago, but two things make me debate that decision every year: live sports and local TV news. I’m a transplant to Baltimore, so my team, the New Orleans Saints, is only available if I pay a ridiculous price for the NFL’s Sunday package. As the team grew in national fame, I spent more but still could not watch big national games or the occasional Baltimore Ravens vs. New Orleans Saints match because of antiquated TV rights rules.

In New Orleans, I know the local TV news like the back of my hand, but as a cord cutter, I can’t tell you a thing about Baltimore’s local stations. Why, when it seems clear that traditional TV has lost the war, do holdouts like local news and sports continue to make it so hard to take the leap?

In 2022, Forbes reported that “Streaming Viewership Surpasses Cable For First Time, Nielsen Says.”

While streaming will likely remain the dominant form of television consumption, Fuhrer told Forbes that broadcast and cable will likely “see some rebound” this fall, as college sports and the NFL season start up and attract a higher share of viewership to those mediums.

Outside of the NFL and college football, every other sport has embraced streaming and worked to make watching games more accessible. American football is stuck with a problem: few companies are big enough to lay out the dough the leagues require, and until that changes, football has dug in and embraced traditional TV.

TV news is different but similar. News is stuck between an unclear business model in streaming and a traditional one that, while declining, still pays. CNN pushed hard to grow its online news business only to scale back drastically. Other broadcasters flirt with the idea but struggle with shrinking audiences or with having to build their own distribution systems. So while traditional TV holds on for dear life to maximize profits and return shareholder value, these companies continue to miss the entire point.

In 2018, the Guardian reported that “Young people are not watching TV news, but they still want to know about the world,” and why wouldn’t we expect that, when back in 2012 The Hollywood Reporter was already covering these issues in “Why Kids’ TV Is Scrambling to Stay Afloat”?

Despite the effort, Nickelodeon and others increasingly compete with their own content on Netflix as their parent companies eagerly make rich licensing deals. In early May, Bernstein analyst Todd Juenger issued a report blaming “drastic declines” in ratings for kids networks in part on repeats of older episodes in homes that subscribe to the streaming and DVD service. The study was controversial because it was based only on data from homes that subscribe to TiVo, which is not necessarily typical of the broad universe of TV homes, but Juenger says “executives should think hard whether they want to sell this content to Netflix. The money looks good in the short term, but if you believe what the data says, as Netflix gets more subscribers and people who use it more get accustomed to it, the impact is going to grow.”

Local TV, cable news, and football are getting the profits today, but all three are missing out on building a relationship with a digital-first generation they’ve chosen either to ignore or to force onto their turf. As Netflix begins its cord-cutting tour, do not forget Netflix CEO Reed Hastings’ quote on who they compete with:

“We earn consumer screen time, both mobile and television, away from a very broad set of competitors,” the quarterly earnings statement read. “We compete with (and lose to) ‘Fortnite’ more than HBO.”

What will the next generation pick when deciding between Roblox, Fortnite, and the NFL? Guess we will find out.

-

Reddit and the End of Open APIs

This sucks. Apollo has been my go-to Reddit reader, and I don’t want that to change, but come the end of the month, it’s happening whether I like it or not. If you’re not in the loop on Reddit’s API drama, the TL;DR is that Reddit moved from open and free APIs to a fee-based system that charges based on the number of API calls you make. If this sounds familiar, it’s because Twitter went down a similar path, and many other open platforms have decided to shut the doors to open API access. The argument for why? OpenAI and other AI models are being trained on hordes of open Internet data, and, of course, there is the chance to eke some revenue out of all the folks hooked on Reddit’s content.

As you might imagine, that approach makes the cost to run something like Apollo unsustainable.

Is Reddit wrong? Apps built for platforms like Twitter and Reddit are like symbiotic bacteria, but one organism is much more dependent on the other. As a platform, Reddit is about user-generated content, and as with Twitter or LinkedIn, it makes us feel like investors or partners in this whole social sharing experiment. But let’s be honest. Revenue and control of the platform you own is what this is really about. If you’re not in control of the last mile, you can’t control how your consumers interact with you or your content. You’re constantly limited in how you can advertise, how you personalize, and the ways you can use them to generate revenue.

Hey Reddit, when you fix the mobile and iPad apps, call Mindgrub. We make great mobile apps.

-

Open APIs

The idea of open APIs and access to platforms has become a surprisingly divisive thing. Like most stories in 2023, our story of APIs starts with Elon Musk and Twitter and the decision to shut down third-party app access.

Many, many, many folks were upset that Twitter would shut off access to Tweetbot and Twitterrific. These apps have been part of Twitter from the start, and one inspired Twitter’s logo. To add insult to injury, this made us all collectively realize that Twitter’s mobile app is not great (call me, Mindgrub builds excellent apps). But Twitter didn’t simply ban third-party apps; instead, it rate-limited API calls and implemented a new system that charges based on the number of API calls per month. The price tag was so hefty that, bit by bit, folks said nope.

Unrelated to Twitter, OpenAI blew through the doors of technology like the Kool-Aid Man. Whoever had AI on the 2023 bingo card deserves all the money. Products like DALL-E 2 and ChatGPT continue to blow our socks off – but then the deep, dark secrets of OpenAI and other AI platforms began to drip out.

These LLM (large language model) systems need data, and when I say data, I mean all the data. The more you can feed the dang thing, the better; it’s like Audrey II yelling “Feed me, Seymour!” and it wants all the information. Some of the best information came from the most open of sources, places like Twitter, Reddit, and Stack Overflow. These platforms are unique in having tons of experts who share advice or answer questions in the most open forums.

Elon Musk and Twitter responded that this was why they needed to lock down APIs and tweets, so that eager AI training models couldn’t consume this valuable training data without paying the troll toll. Reddit and other sources of this training data followed, and now we find ourselves full circle.

Apollo, my preferred Reddit reader and the only reader with a pixel pet, finds itself facing the same issue as Tweetbot and Twitterrific: the Reddit APIs it depends on cost too much.

I get it. I understand it. But sometimes I think of the founding of our great Internet and a time when information was free and people linked for love. I guess that was the Internet’s ’70s – today is a different time, but I can’t help but wonder if the hordes of people training models on the open Internet will find the reins getting pulled a little tighter. I also wonder if this just continues the trend of paywalls popping up everywhere.

-

Originality

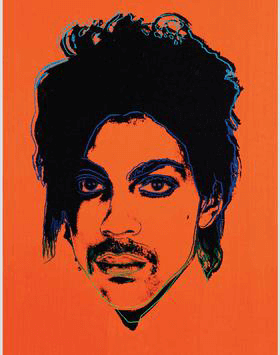

AI has moved the idea of derivative work into the headlines. After all, originality makes the greats great, or does it?

“Great artists steal.” – Steve Jobs

I mean, think of all the original work we love—music, movies, tv, and art that genuinely shaped us.

Art is about the creation of something, and something sometimes comes from something. That something original becomes in itself a new art form.

The best part is sometimes a cover, a recreation of what we love and believe – but it also comes from sampling what we like and love, with just the right taste.

But don’t go too far. A sample is good, but too much could be a copy.

But let’s not forget. Real artists steal, right? And art is about creating something not just new but from what we learn or acquire. I learned to write from reading and typing – Now is the time for all good men to come to the aid of your country.

I mean, when do we, as humans, get caught up with the past? Not us. Nostalgia never gets the best of us – but even if it did, we would make it better, and better, and better, and better, and better, and better, and better, and better, right?

So how dare AI copy all these original ideas we have!

I think we need to protect everything and keep the web or anyone from knowing things like a world-famous authentic New Orleans Red Beans recipe.

So is generative AI any different than our own derivative work? I mean, we can’t seem to figure that out – and sometimes, even some folks can’t find the fun in a little joke. Ideas come from so many people I know, so much I’ve read, and so much I want to know.

But maybe DALL-E and OpenAI find it kind of funny?

Originality is a really complex topic, and the impact of AI on jobs is scary. Shouldn’t we want the same inputs that allow us to create to be the things we feed the AI we use? Is original work generated from the same tweets and archives I read any more derivative than my own?

In the end, we can all agree that copying can create some of the best art – or maybe we’re all just here for the ride.

-

Security Best Practices When Using AI to Write Code

At Mindgrub, the engineering team, like many, has found itself wondering just how good AI is at writing code. What do these things really know, and can they make us better, stronger, and faster?

I’m here to tell you that it is pretty good, in some cases damn good. If you need a quick code snippet or want to convert existing code into a different language, tools like ChatGPT do a fantastic job. We’re also actively using and exploring tools like GitHub’s CoPilot, a development assistant that makes IntelliSense look antiquated. Our engineers are also investigating a metric ton of generative AI code tools like CodeWP for WordPress, AWS’s CodeWhisperer for, and X.

Generative code is impressive and quick, but is this code safe to run? After all, these AI tools open a brand new and wholly unexplored set of security concerns and unknown vectors for attacks by hackers. Most of these may not directly impact code security, but they point to the level of awareness we as technologists need to have as we explore the use of these platforms in our work lives.

Developers have already built AI tools that can brute-force passwords or perform credential stuffing with impressive speed and accuracy. Much of this builds on open-source tools like PassGAN, which get better every day. Some researchers have gotten clever and jailbroken or bypassed AI safeguards to trick systems into writing code that uses known exploits or serves nefarious purposes, such as a DoS (denial-of-service) attack. Others are creating advanced phishing systems that generate highly personalized messages, making truth and reality increasingly hard to differentiate.

Can we trust our most junior engineers or non-engineers to use these tools to create code for production? Do we have a choice? We all know the reality is that this is already happening and will only increase. What we really need is to find ways to keep our AI-assisted code safe and secure.

So to help, I will first explore a handful of these tools and give some hints along the way. I will also offer suggestions on what to look for and ways to keep AI-generated code secure.

Github CoPilot

Many in my age range have joked about CoPilot being the replacement for Clippy, and to be fair, both are assistants. However, CoPilot, unlike Clippy, is powered by OpenAI’s GPT artificial intelligence and trained on bajillions of lines of code hosted on Microsoft’s GitHub. These days, GitHub is the undisputed giant of public and private code repositories. It is also home to an incredible number of open-source projects. If code repositories were schools, GitHub would be Xavier’s school for the gifted or Hogwarts, without the riff-raff.

CoPilot integrates into many popular IDEs, such as IntelliJ and Visual Studio, to extend IntelliSense-style auto-suggestions. For most, it will feel like getting a quick suggestion based on context, but these aren’t old-school suggestions. Often, CoPilot suggests entire functions rather than finishing a line or two of code.

In this, CoPilot and tools like ChatGPT can be very different. CoPilot feels more like an assistant or pair programmer offering thoughts along the way. You pick and choose, but the architecture and direction of development are still very much yours.

As a code generator, the results are mixed. In 2021, a DevSec engineer reviewing early results documented multiple examples of CoPilot suggesting code with security issues. My own experience is mixed. I’ve seen code snippets with SQL injection vulnerabilities and other, more minor problems. The more significant concern, IMHO, was not the quality of the code but how quickly I accepted that it would do what I anticipated.
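To make the SQL injection point concrete, here is a minimal sketch using Python’s built-in `sqlite3` module and a hypothetical `users` table. It is an illustration, not actual CoPilot output. The first function splices user input into the query string, the kind of suggestion worth catching in review. The second lets the database driver bind the value as a parameter.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list:
    # Vulnerable: user input is pasted directly into the SQL text.
    query = f"SELECT id, username FROM users WHERE username = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str) -> list:
    # Safe: the driver sends the value separately as a bound parameter.
    return conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    ).fetchall()


def demo_db() -> sqlite3.Connection:
    # In-memory database with a hypothetical users table for the demo.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany("INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)])
    return conn


if __name__ == "__main__":
    conn = demo_db()
    payload = "nobody' OR '1'='1"
    print(len(find_user_unsafe(conn, payload)))  # 2: the OR clause returns every row
    print(len(find_user_safe(conn, payload)))    # 0: the payload is treated as a plain name
```

The two functions differ by only a few characters, which is exactly why a quick "looks right" acceptance is dangerous.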

OpenAI ChatGPT

OpenAI’s ChatGPT is a general-purpose model, the kind of broad intelligence people often lump under the label AGI, or Artificial General Intelligence. GitHub’s CoPilot, by contrast, has been trained primarily on concrete knowledge bases around programming and code, giving it intelligence limited to a very particular realm. In short, it’s like a toddler who can tell you everything about Pokemon and nothing about the general makings of our world.

That breadth makes writing code more of a hobby for ChatGPT, but it also lets it be a bit more creative in how it answers questions. Like us, it can draw on general knowledge to reach sometimes surprising conclusions.

ChatGPT, as a development tool, is an excellent starter. It excels at transforming example code into your preferred programming language. It can take code snippets and re-write them with additional features or adjustments. It also does a fantastic job of creating starter applications.

To test ChatGPT, I asked it to generate the full login, logout, and sign-up logic for an application in JavaScript. I purposely omitted details to see if the AI would assume the need for a unique identifier like a username or email address. I also avoided mentioning the need to sanitize inputs and SQL values or to hash sensitive values like passwords.

Weirdly, the responses differed: the same prompt could generate wildly different answers. My first attempt produced an application that hard-coded the database password and showed a noticeable lack of validation. That response stopped before completing, as if the AI hit a point that it knew invalidated what it had already written. Without changing my prompt, I let it regenerate and got a much better response that fixed those issues without my editing or asking.
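The problems in that first response, a hard-coded database password and missing validation, have well-known fixes. Below is a minimal sketch of them, written in Python rather than the JavaScript I prompted for. It is not what ChatGPT produced. The `APP_DB_PASSWORD` environment variable, the email pattern, and the 12-character minimum are illustrative assumptions. Passwords are hashed with a salted, deliberately slow key-derivation function (PBKDF2 from the standard library) rather than stored as-is.

```python
import hashlib
import hmac
import os
import re

# Illustrative values; tune the iteration count to your hardware and current guidance.
PBKDF2_ITERATIONS = 600_000
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def get_db_password() -> str:
    """Read the database secret from the environment instead of hard-coding it."""
    password = os.environ.get("APP_DB_PASSWORD")  # hypothetical variable name
    if not password:
        raise RuntimeError("APP_DB_PASSWORD is not set")
    return password


def validate_signup(email: str, password: str) -> None:
    """Minimal server-side validation; real rules will vary by project."""
    if not EMAIL_PATTERN.match(email):
        raise ValueError("invalid email address")
    if len(password) < 12:
        raise ValueError("password must be at least 12 characters")


def hash_password(password: str) -> str:
    """Return 'salt$digest' in hex; a random salt makes equal passwords hash differently."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, PBKDF2_ITERATIONS)
    return f"{salt.hex()}${digest.hex()}"


def verify_password(password: str, stored: str) -> bool:
    """Recompute the digest with the stored salt and compare in constant time."""
    salt_hex, digest_hex = stored.split("$")
    candidate = hashlib.pbkdf2_hmac(
        "sha256", password.encode(), bytes.fromhex(salt_hex), PBKDF2_ITERATIONS
    )
    return hmac.compare_digest(candidate, bytes.fromhex(digest_hex))
```

A sign-up handler would call `validate_signup` and store only the result of `hash_password`. A login handler would call `verify_password` against that stored string.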

Like GitHub’s CoPilot, the results came back mixed, but most of the code from ChatGPT required a bit more knowledge and cleanup to run. For example, ChatGPT suggested a Makefile and a SQL script for my user database table but did not walk me through actually setting them up. It was much more of an accelerator, requiring me to reimplement a lot of what it provided.

How do we keep it secure?

AI code-generation tools are super accelerators. They are also trained on our own lousy code and suffer from the human mistakes we are all prone to. For junior engineers and non-engineers alike, these tools provide incredible power, but they do not guarantee that the end result is better, more secure, or of higher quality.

So how do we keep them secure? We do what we should have been doing all along (or are already doing).

First, let’s keep following the best practices of software development. If you have a team, make sure you enforce peer reviews and merge reviews. As the saying goes, measure twice and cut once: more eyes, especially those of a lead or senior engineer, will only make your code better.

Second, good unit tests and code coverage are some of the best checks a developer can put in place. Unit tests require engineers to understand the expected results of the code they write and to verify that the code behaves as anticipated. By requiring higher code coverage, we let our engineers use more generated code while safeguarding the upper and lower limits of these operations with tests.
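Suppose, for example, that an assistant generated a hypothetical `clamp_page_size` helper. A few unit tests can pin down its lower and upper limits, so a later regeneration or edit that breaks them fails loudly. This sketch uses Python’s built-in `unittest`. The function and the limit of 100 are assumptions for illustration.

```python
import unittest

MAX_PAGE_SIZE = 100  # hypothetical limit, for illustration only


def clamp_page_size(requested: int) -> int:
    """Stand-in for AI-generated code: keep a page size within 1..MAX_PAGE_SIZE."""
    return max(1, min(requested, MAX_PAGE_SIZE))


class ClampPageSizeTests(unittest.TestCase):
    def test_lower_limit(self):
        # Zero and negative requests are raised to the minimum of 1.
        self.assertEqual(clamp_page_size(0), 1)
        self.assertEqual(clamp_page_size(-50), 1)

    def test_upper_limit(self):
        # Oversized requests are capped at MAX_PAGE_SIZE.
        self.assertEqual(clamp_page_size(MAX_PAGE_SIZE + 1), MAX_PAGE_SIZE)
        self.assertEqual(clamp_page_size(10_000), MAX_PAGE_SIZE)

    def test_values_in_range_pass_through(self):
        for value in (1, 25, MAX_PAGE_SIZE):
            self.assertEqual(clamp_page_size(value), value)
```

Saved as a hypothetical `test_pagination.py`, it runs with `python -m unittest test_pagination`. The edge cases are the point: they are where generated code most often goes quietly wrong.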

Third, watch license and dependency management. Problems can accidentally creep into code when using ChatGPT; it’s not uncommon for it to recommend libraries and incorporate them into a larger code base. For production code, this can unexpectedly force you to accept a GPL license, open-source a chunk of your code, or introduce vulnerabilities from an older library. These days, we can add analyzers to our CI/CD pipeline that check and warn about these scenarios and reduce unexpected risks.
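Dedicated license scanners belong in the pipeline, but the core idea fits in a few lines. This sketch reads license metadata for installed Python packages through the standard library’s `importlib.metadata` and flags anything that looks like a GPL-family license. The keyword list is an assumption and far cruder than a real analyzer. Treat matches as prompts for review, not legal conclusions.

```python
from importlib import metadata

# Illustrative markers only; a real license analyzer uses SPDX identifiers and policy rules.
COPYLEFT_MARKERS = ("GPL", "GENERAL PUBLIC LICENSE")


def is_copyleft(license_text: str) -> bool:
    """Rough check: does this license string look like a GPL-family license?"""
    upper = license_text.upper()
    return any(marker in upper for marker in COPYLEFT_MARKERS)


def installed_licenses() -> dict[str, list[str]]:
    """Map each installed distribution to its license classifiers and License field."""
    found: dict[str, list[str]] = {}
    for dist in metadata.distributions():
        md = dist.metadata
        if md is None or "Name" not in md:
            continue  # skip broken or metadata-less installs
        entries = [c for c in (md.get_all("Classifier") or []) if c.startswith("License ::")]
        if "License" in md and md["License"]:
            entries.append(md["License"])
        found[md["Name"]] = entries
    return found


def flag_copyleft(licenses: dict[str, list[str]]) -> list[str]:
    """Return package names whose license metadata mentions a GPL-family license."""
    return sorted(
        name for name, entries in licenses.items() if any(is_copyleft(e) for e in entries)
    )
```

A CI step could call `flag_copyleft(installed_licenses())` and fail the build when the returned list is not empty.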

Other tools in the CI/CD pipeline can also safeguard against poor code quality:

• Linters and syntax checks help maintain code conformity and catch common mistakes in a language. These same tools can scan for passwords committed inline to a code repository and reject code that is not in the company’s agreed-upon format.

• Many companies offer code security analyzers that look for the common mistakes and prevent developers from

• Static code testers scan the executable binary generated from an application for
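As a rough illustration of the secret-scanning idea above, here is a small Python check that could run as a pre-commit hook or CI step. The regular expressions are illustrative assumptions. They will miss plenty and flag some false positives, and purpose-built secret scanners maintain much larger rule sets.

```python
import re
from pathlib import Path

# Illustrative patterns only; dedicated secret scanners ship far larger rule sets.
SECRET_PATTERNS = [
    # A password/secret/key-like name assigned a quoted literal of 4+ characters.
    re.compile(r"""(?i)(password|passwd|pwd|secret|api_key)\w*\s*[:=]\s*["'][^"']{4,}["']"""),
    # The shape of an AWS access key ID.
    re.compile(r"AKIA[0-9A-Z]{16}"),
]


def scan_text(text: str) -> list[int]:
    """Return 1-based line numbers that look like hard-coded secrets."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        if any(pattern.search(line) for pattern in SECRET_PATTERNS):
            hits.append(lineno)
    return hits


def main(paths: list[str]) -> int:
    """Print each suspicious line and return 1 if anything was found, else 0."""
    failed = False
    for path in paths:
        text = Path(path).read_text(encoding="utf-8", errors="ignore")
        for lineno in scan_text(text):
            print(f"{path}:{lineno}: possible hard-coded secret")
            failed = True
    return 1 if failed else 0
```

A hook would pass the staged file paths to `main()` and block the commit when it returns 1. Reading the value from the environment, as in `os.environ["API_TOKEN"]`, does not trigger the check.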

If you still find yourself adamant that your company or project is not ready for generative AI, you can also look into tools that help detect AI-generated code. As a warning, this can be a bit of an arms race: as AI tools improve, the detectors will take time to adapt and identify the latest version of GPT or CoPilot.

For many dev shops, AI will introduce a new wild card in how we build things, but that wild card can be a great accelerator that increases productivity and helps make junior engineers bigger contributors to production projects. Embracing the unknown can be scary, but with the proper safeguards in place, we can create a secure environment where our teams can thrive.

-

A world without CarPlay

Whenever I rent a car, I have a straightforward request: please support Apple CarPlay. It is single-handedly the most important feature for me in the next car I purchase or rent. That makes GM’s decision to discontinue support for CarPlay and Android Auto on its future electric vehicles seem so crazy. I’m not the only person who really hates GM’s plan.

What’s tricky is the unenviable position in which GM and most car manufacturers find themselves. The computer that powers a car is increasingly more than an infotainment system. It has become the brain behind many features, from adaptive cruise control to automatic parking. GM’s move is to create an integrated system that couples maps with features like extending a vehicle’s battery range or warming the battery before charging. The car computer is the thing that will lead us to autonomous driving and put a pretty facade on it.

The war car companies face is not about vehicles but about owning the relationship between us and all of our computing devices. The walled gardens around many of us keep getting taller and harder to leave. Today I move seamlessly from iPhone to iPad to Mac, and some of my data flows with me as I move from one to the other. What car manufacturers seem to forget is that the war is not for some of our attention but all of it. My phone has become my wallet and driver’s license; it controls my house, opens my office door, knows my meetings, connects me with friends and family, and so much more. I no longer listen to FM, AM, or XM radio; I listen to Spotify, Apple Music, or podcasts. For many of us, the phone’s ubiquity has transformed it into the closest thing we have ever had to a trustworthy digital assistant.

The next leap for cars is to build on that relationship. It’s a future where the car is nice and warm and ready for the morning commute. It is one where the car effortlessly picks up the morning news, podcast, or music and carries it into the drive. Or one where the car and house share information to keep the temperature the same. This future requires deep knowledge of the user, and at present, Apple, Google, and Samsung are the exclusive holders of this data.

For GM, this is a hard place to live. Giving up the center console long-term places it on a path to becoming a commodity, where the guts of the vehicle no longer come from the manufacturer. Worse, if Apple and Google compete directly and build a car (a space where Google’s sister company Waymo already partially competes), it will be difficult, if not impossible, for GM to differentiate.

The EV transformation masks many massive undercurrents facing the car industry. What is clear is this market will be very different in a decade. I bet the winners will have some version of Apple and Google’s platforms on the dashboard.

-

Is AI the end of Search Engine Optimization?

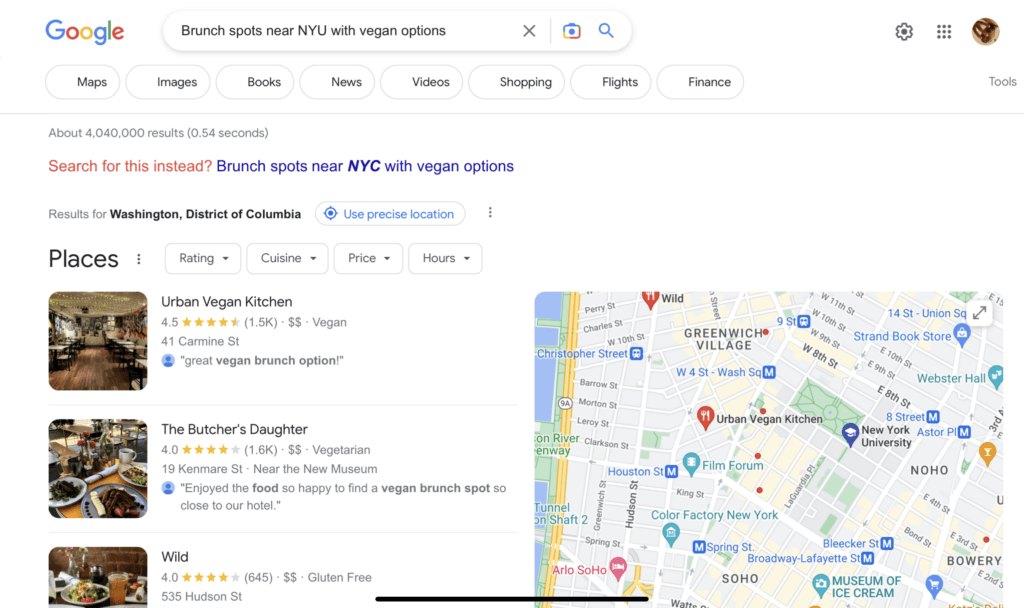

In testing the new crop of AI chatbots, I noticed a consistent and fascinating trend: hunting through search results really sucks, and I want someone to tell me what I want. I want the AI bot to give me the answer. Not Bing or Google results pages or Yelp listings. Tell me what I want to know.

Here is a recent scenario: I’m heading to NYC to do college tours with my daughter and staying at a hotel near NYU. The Sunday before, I plan to do brunch with two friends. One is a pescatarian, and the other is a vegetarian; I eat practically anything and everything. We want a place with a casual environment, so we can hang out at a table or couch with drinks afterward, and it should be within walking distance of my hotel.

I hit Google and turned this into the search term “Brunch spots near NYU with vegan options.”

Google’s first set of results overlays some great-sounding places on a map. Now I’m looking up locations or jumping at whatever name sounds great. The ratings are all 4 stars or higher, so they’re not especially useful.

If I continue to scroll, I’m met with SEO (search engine optimization) SPAM: “ten best” lists that are AI-generated puff pieces with no actual human curation, or Yelp’s SEO spam search pages that often have nothing relevant to my search.

When clicked, Yelp shows a search on the topic with the top third of the page full of ads and sponsored posts that are not relevant to the search. Sadly, Yelp did not have a list of places near NYU, so I was met with a list of restaurants in Manhattan.

I get a list of options in the remaining results – some good, some not. Genuine articles from actual New Yorkers, local bloggers, or local neighborhood papers are nonexistent.

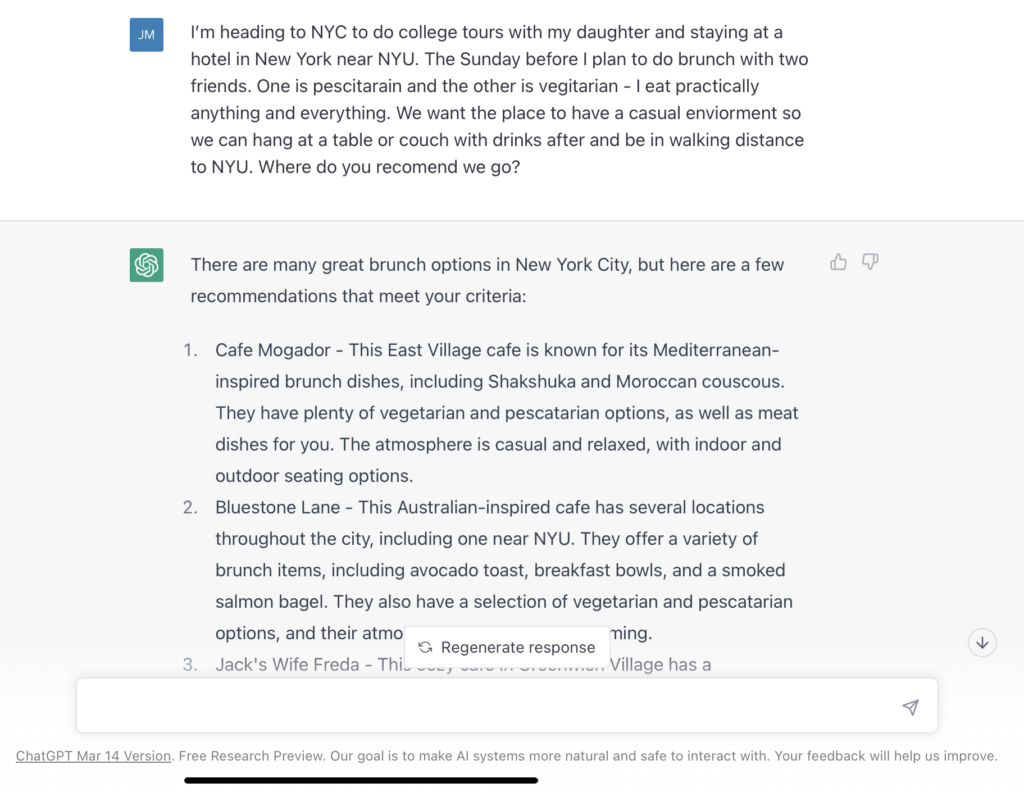

So after getting frustrated and feeling defeated, I decided to ask ChatGPT.

This is the full transcript:

There are many great brunch options in New York City, but here are a few recommendations that meet your criteria:

1. Cafe Mogador – This East Village cafe is known for its Mediterranean-inspired brunch dishes, including Shakshuka and Moroccan couscous. They have plenty of vegetarian and pescatarian options, as well as meat dishes for you. The atmosphere is casual and relaxed, with indoor and outdoor seating options.

2. Bluestone Lane – This Australian-inspired cafe has several locations throughout the city, including one near NYU. They offer a variety of brunch items, including avocado toast, breakfast bowls, and a smoked salmon bagel. They also have a selection of vegetarian and pescatarian options, and their atmosphere is cozy and welcoming.

3. Jack’s Wife Freda – This cozy cafe in Greenwich Village has a Mediterranean-inspired menu with plenty of vegetarian and pescatarian options. They’re known for their shakshuka and their grilled haloumi cheese sandwich. The atmosphere is casual and inviting, with indoor and outdoor seating.

All three of these options are within walking distance of NYU, have vegetarian and pescatarian options, and a casual atmosphere that’s perfect for hanging out after brunch.

From here, I chatted with ChatGPT and added additional thoughts and filters, like wanting a place that served mimosas and one with traditional American breakfast options. I picked a spot, made reservations, and was done.

The experience made me realize that the hunt through search results and the growth of SEO SPAM have turned search into something that no longer produces useful results. Are the results from ChatGPT genuine? No, but they feel more authentic and direct to my request. It gave me an answer. This content may not tap into alternative New York magazines or the hip new blogger, but it feels definitive.

What is funny is that Google could have gotten here first, and in a way, it did. Many protested the company’s urge to skip showing results and provide direct answers or snippets instead. If you remember, Google’s argument was that if you need a definition, why link to 15 different dictionary sites when it can answer the question itself? This generated tons of fear among small businesses and digital marketers, as it quickly cut off a powerful funnel for leads. Worse was the fear that the logic for deciding this would be primarily money-driven and hidden from the larger population.

Folks, that is what ChatGPT does, and it is exactly what we’re craving. Would you like Siri, Alexa, or Google Assistant to tell you where to go for dinner rather than give you a list of 8 million possibilities? I would. Choice may not be what we are looking for after all. Right? Right…

-

Is ChatGPT a Big Deal?

For the last few weeks (maybe months), OpenAI and several of its products have been all over the press. If I knew nothing, I’d think this was the first time anyone had ever experienced AI or witnessed the immense number of AI-based products we have consumed as customers for years.

It’s not as if demos have already shown AI ordering food for us. It’s not as if it can look at an image and determine the type of plant. Can’t it translate words for us in real time? Can’t AI write articles or create music?

All of the things ChatGPT and recent AI innovations do are old news. We’ve been riding this wave since the video Humans Need Not Apply was recorded in 2014.

What has changed is access to this tech. Before OpenAI, using AI required complicated model training and hidden, developer-centric tools. You needed product teams to hook up APIs and write code. OpenAI reflects not innovation but what happens when existing technology becomes easy for anyone to access. OpenAI ripped down walls and created a simple interface to showcase development that has been ongoing for years.

If LL Cool J were to sum it up, he might say, “Don’t call it a [revolution]. I’ve been here for years.”

-

Apple Maps Ready for Business?

How many of you remember the great Apple Maps debacle? In the business books of major failures – Apple Maps must sit in the top 25.

I never stopped using Apple Maps, but I did bounce between Google Maps and its sister product Waze, and weirdly, along the way, Apple Maps got good. It got really good; some say it is the best US mapping platform. Maps showcases an Apple superpower that sometimes gets overlooked: commitment and persistence. Apple sets its mind to things, and through painful and consistent iteration, it can turn the largest turd into a diamond.

While this is great for Apple, many small businesses couldn’t care less. If you want to build brand recognition or get more people in the door, you have relied on SEO, Google Search Ads, social media marketing, or updating your Google My Business Profile. Together, these tactics have become the base layer of the small business digital marketing playbook.

Apple, however, is slowly rolling out products that highlight its unique competitive advantage and show that it may plan to push into the small business market in a much bigger way.

For starters, Google’s My Business Profile is a product that has allowed the company to crowdsource data and, in exchange, offer businesses a boost in relevance across its search platforms. If you do not have a profile, I highly recommend you leave now and create one. Google uses this to ensure it has up-to-date information, including links to menus, opening and closing times, reviews, rich photos, and other data. Assuming that information provided directly is better than what it can get from crawling, Google tends to feature businesses with this profile data over those that do not provide it.

Apple knew it needed to make up ground and partnered with Yelp to source this data for its Maps platform. In the last year, though, it has become much more aggressive, offering a new tool named Business Connect that allows business owners to provide this same type of data directly to Apple. As with Google’s profiles, some evidence suggests that Apple prioritizes businesses that provide this data in its own search results, including features like Spotlight.

Rumors also point to Apple beefing up its internal ad division with some trial and error – but the writing has long been on the wall that Business Connect is the building block for more extensive paid search ads in Apple Maps. Some suggest that search ads in Spotlight and Maps could become extensions of Apple’s App Store search ads and give businesses more ways to reach Apple’s customers.

What makes this very Apple is the focus on exploitability within its walled ecosystem. Apple’s approach to working with businesses is giving them what they know is a valuable audience – high-income Apple users – while also making it easier for its customers to access what they need without leaving the walled garden.

That’s right: in Apple’s world, these ads help Apple customers engage within the ecosystem they love, and we all benefit. After you find a listing on Apple Maps, you can ask questions with Apple Business Chat, which is integrated with iMessage. Looking for reservations? OpenTable has, for years, had deep integration with Apple, allowing you to reserve directly from the Maps app or by voice with Siri. Once you make it inside, pay with ease using Apple Pay – I love the ability to pay at a restaurant using a QR code.

Like many things, Apple is playing its cards slowly, but bit by bit its game plan to target small businesses is beginning to crystallize. If you’re finding the competition super steep on social media, Google, and other platforms, it could be time to dip a toe into Apple’s unique model and see who within its ivory-colored walls wants what you have.

-

You hear me?

Since Star Trek: The Original Series, I have imagined living in the future. Talking with a computer conversationally and using our words to do anything sounds like a dream come true. Amazon Alexa’s introduction gave us that possibility with the first viable form of voice control. Even before Alexa, I was a die-hard speech command lover, using tools like Dragon speech recognition for dictation and voice macros on macOS to talk with Insteon devices and run other random commands. Eject CD-ROM! Turn the light on!

Now we seem even closer to that promised land. Google Assistant, Apple’s Siri, Microsoft Cortana, Amazon Alexa, and Samsung Bixby have begun a relay race to create an AI we can talk with. It feels like we keep inching closer and closer, and you know what? Voice is a pretty horrible way to communicate.

I often find myself tongue-tied, struggling to figure out how best to ask for the simplest or most complicated of things. I would wager that I sometimes spend more time thinking about how to phrase a request than the request itself takes. But we can already see chatbots and AI getting better at understanding us, and this will get easier. Conversations should become more natural and feel less strained.

My thoughts have evolved on where a voice request starts and what the natural reaction should be. For example, what time does a restaurant close? Oh, great, it’s open, and I can make it. But was that my real question? If it’s closed, where else could I go? Would I prefer to walk or drive? Will I need the menu? Does it meet my dietary needs? It’s not really a voice command I need but a conversation.

Even if voice offered the info you need conversationally, would it really scratch the bigger itch? Yes, I want directions, but can I see the map first? I can judge a neighborhood much better by looking than by hearing an address. What is on the menu? Can I view it? We all know that a one- or two-minute readout of the menu is not what we want. Sometimes when debating music choices, I ask to hear jazz, but could you show me five playlists I might like? Present my options on an interface near me, or start playing if I don’t respond. If I put down my phone or tablet, voice should re-engage and continue to help me. Did you want me to book those reservations? Should I send everyone an invite?

Our next evolution in voice is understanding that it is not perfect, and that is why we continue to read and write. It is why we take pictures and record videos or audio. The term muscle memory speaks to the speed of physical interactions, a speed voice can’t replicate. Our future is the ability to connect all of these mediums in a way that truly feels connected and seamless, and I think we may all have missed this point. Give me what I want, where I want it, and when I want it.

What we all want is not one way to reach the world around us but many ways. The future is multi-sensory and tuned to the best combination of equipment at your disposal. If I’m in my bedroom, it may be my voice and my TV. In the office, my laptop (my voice may disturb others around me). On a walk or run, voice and a watch or phone. In the car, well, the car.

-

The Bank Unbundling

The big news in the finance industry is Wells Fargo, the number 1 player in the housing market, deciding to pull back on mortgages and focus on a more profitable slice of the market. This may sound like a sign of a weakening economy, and it is, but it also continues a long trend in the disruption of banking.

Banking, the business of offering checking, savings, and money market accounts, has long tipped from a profit center into a commodity business. For most banks, the actual accounts they offer work as a loss leader to move customers into other, more profitable products. In years past, it was not uncommon for a person to have an auto loan, mortgage, credit card, savings account, brokerage, and other vehicles with just one institution.

This model has been decimated by the move to best-of-breed. Best-of-breed start-ups focus on small slivers of the whole, making inroads quickly by offering one product that outclasses others in its category. These days Robinhood is the #1 retail brokerage company. Rocket Mortgage is quickly vaulting to #1 in the mortgage and housing market. Capital One has long held the crown for credit cards aimed at middle- and low-income families.

Banks have responded with complicated programs and overdraft charges as they search for some way to monetize existing customers. In many ways, it is akin to cable companies ratcheting up fees or charging for customer service. The problem is that banking is table stakes these days: more of a free offering than a core product. Today almost any company can offer full banking services by outsourcing to Banking-as-a-Service (BaaS) companies like Green Dot. These new companies are bucking the trends of traditional banks by making banking free, offering high interest rates, removing overdraft fees, and in many cases making direct deposits available days earlier. These new solutions are also making it easier for start-ups to offer innovative financial products with much less regulation than the old guard.

Leading this evolution are solutions like Greenlight, a kid-friendly banking and investment platform, and robo-advisors like Wealthfront and Betterment, which offer ultra-competitive banking features at little additional cost.

This great unbundling has put banks in an increasingly difficult pickle. In the past, they showed their value through physical branches, accessible ATMs, and a one-stop shop of diverse products. The reality is that those products are no longer as individually competitive as they once were, and customer loyalty is at an all-time low.

To make things worse, a new bundling is beginning through acquisition, and it is starting to reshape what we expect from our future institutions. For example, Rocket Mortgage is on an acquisition tear that has expanded its footprint as it tries its hand at other services like auto and personal loans to increase revenue from its customers, all while Robinhood recently began offering IRAs and other retirement products.

Every major bank is looking in the mirror, asking how it will compete five years from now, and the writing is very much on the wall. Banks must adapt, acquire, or ultimately face irrelevance.

-

CES 2023 Recap

I last attended CES in 2020; shortly after, doors closed worldwide. After a two-year hiatus, I had no idea what to expect and feared the conference would lack the thrill it used to have. I must say CES did not disappoint, and Mindgrub’s return to Vegas was a thrill we will not soon forget.

CES is a reminder that technology and innovation require painstaking development. Year after year, we see new things, but the real story is watching crazy ideas evolve from conference to conference. Ideas begin to settle, companies emerge as front runners, and concepts coalesce into a solid direction.

Trends I witnessed include growth in assistive and accessibility technology, the evolution of electric and autonomous vehicles of all sizes, the maturity of robots, and new technology that supports and extends VR.

Accessibility Tech at CES

Accessibility technology has been in the limelight lately, and some of the possibilities offer an opportunity to extend the independence of all types of people. Two standout products this year were AR glasses and a sensor to help those with limited eyesight get around.

My co-worker Shalisa demoed the AR glasses, a device that offers real-time closed captioning. With this device, folks who suffer from hearing loss can still engage in conversations and fully participate in everyday discussions.

The Ara from Strap Tech combines much of the same technology found in our cell phones and autonomous cars to create a system that helps blind or visually impaired users navigate everyday environments. It uses LiDAR to build the same kind of SLAM (simultaneous localization and mapping) data and passes what it senses to its user as vibrations.

I was, however, surprised at the lack of hearing aids after policy changes opened the door for over-the-counter assistive hearing devices. Next year I’m hopeful we will see companies explore this new category.

Electric and Autonomous Vehicles

The move to electric vehicles is no surprise, but the sheer number of companies investing in EVs was much larger than I expected. Tons of upstarts showed off concept vehicles that felt like the future, and some of these concepts definitely piqued my interest.

Lightyear’s solar-powered car with an electric drivetrain was a marvel. Waymo showcased its self-driving cars with an expanding fleet that included a custom Freightliner. USPS was also on site with its questionable-looking and controversial new EV platform.

Littering the vehicle mobility areas of CES were scores of buses, shuttles, and other autonomous transport systems designed to move people around urban environments without a driver.

The same tech used for bigger devices also showed up in various miniature transportation devices. One company showcased a chassis perfect for a vehicle delivering packages or food on a route.

And as we get even smaller, multiple companies introduced new electric lawnmowers and U-SAFE water drones that can save people from drowning in the ocean.

Virtual Reality, Augmented Reality, and the Metaverse

Companies continue to search for viable commercial uses for VR and AR, and you could see that on display at CES with the increase in hardware to support the VR ecosystem.

Two great booths for this were bHaptics and HaptX, which offered new generations of haptic VR devices. I wish I’d had the chance to try them, but the secondhand reviews of the HaptX Gloves G1 system were very exciting.

Some VR ideas focused on live air and combat training that seemed perfect for some of the work by our clients, such as TAK.

One demo I sadly did not sign up for in time was Magic Leap’s glasses. A solid line surrounded the booth, and sign-ups to try out the glasses seemed to disappear fairly quickly every morning.

To cap off our VR venture at CES, the Mindgrub team signed up for the Deadwood Valley experience at Sandbox VR. Although I have used many VR devices, I did not know what to expect, especially how the group mechanics might work.

Other things

CES was filled with so many other wonderful highlights. At a conference like this, you have to love the whimsical wonder of it all, with crazy ideas on full display for all to witness and try.

The Shiftall Mutalk is a device that may draw comparisons to a different type of voice suppression device. That said, the thing really works! I had a full conversation in front of my co-workers without anyone hearing a peep. I can see the utility for those who need to have a private conversation in a public space.

I’m not sure how to describe the joy of printing a tattoo right on your body, but I really love Prinker’s body printers. I put in an order for one and look forward to the chance to print things on my kids’ faces when they fall asleep.

But the thing that gave me the best laugh was the U-Scan from Withings. I love Withings’ products and will buy this for my own bathroom, but who knew the next step in smart home health would be a urine analyzer? My favorite feature of this device is its ability to recognize family members by their unique urine stream. I look forward to making that one of the MFA options for Mindgrub’s SSO.

Did anyone know digital wind instruments exist as a product? I wish I’d had a chance to try one in person, as the idea of one device that can mimic many wind instruments, the way a keyboard can, is amazing.

One surprisingly sparse area was the 3D printing section. Outside of Formlabs, I did not notice another 3D printing company, but it did not matter, as Formlabs came with a few cool goodies. First was the introduction of Form Auto, a printer capable of printing 24/7 without downtime. From personal experience, this is a big leap and opens the door to more Kinko’s-style printing facilities. Formlabs also featured the Hasbro Pulse Selfie Series toys, which scan your face and allow you to 3D print a custom toy. I’m looking forward to holding my personalized Black Panther toy in my hands.

-

Did Southwest’s Tech Fail?

Many news sources cite Southwest’s lack of investment in technology at its scale. I’m an outsider here, so I can only speculate how this happened, but I find the finger-pointing at technology to be a bit like a Jedi mind trick.

What makes Southwest Airlines so unique is the back-of-the-napkin concept it proudly celebrates during drink service. Southwest runs a continuous loop model that moves a single plane and multiple flight crews across the US, migrating crews from city to city until the plane fully loops back around to its starting destination. If you fly Southwest as often as I do, it is not uncommon for planes to stop at intermediate destinations before continuing on to a final stop. Usually, a plane loops every 2-4 days, and if you fly a regular schedule, you’re pretty likely to end up with the same plane or crew.

Nearly every other traditional airline of scale has functioned on the hub and spoke model. Airplanes venture out from a core hub, like Atlanta, and ultimately the majority of airplanes cycle between these hubs that same day. Growth for an airline requires building out infrastructure to add additional hubs around the country or world.

As you can imagine, hub and spoke rewards geographic focus, so airlines with this model tend to favor destinations in close proximity to their hub(s). You can focus on one core operational city to house the majority of flight crews, giving you a greater concentration of people you can tap when someone is sick or you need to add an unexpected route or plane into the mix.

Southwest’s model strays greatly from this and creates an amazingly efficient system with high reward and high risk. In the Southwest model, crews can live anywhere, but the system can stage them at the desired destinations to serve a route. Planes that no longer need to cycle home to a hub can venture out for days before returning to their “home” destination.

While hub and spoke airlines have required larger and larger planes to expand the reach of their hubs, Southwest has grown on smaller planes making many, many more stops across a larger loop. This model is amazing and one of Southwest’s core differentiators: with this approach, it can offer extremely competitive prices and reach destinations that are simply unaffordable for other airlines. So yes, this is how the system works, and it is by design. However, it also means that one airplane needing unexpected service, or one flight crew calling out, can interrupt a plane’s next 2-4 days in its cycle, something you can refer to as a cascading delay.

So here’s my read. Southwest’s unique scheduling structure has required some level of custom development or Frankensteining of technology solutions to do what it needs to do. These systems also operate at an acceptable level of risk, or built-in slack. As Southwest is the only airline doing this at such scale, it has become riskier to manage these operational needs and harder for humans to intervene when cascading issues occur.
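To make the slack argument concrete, here is a minimal Python sketch of how a single delay ripples through a plane’s loop. It is a toy model under stated assumptions, not Southwest’s actual system: each turn between legs has a fixed number of minutes of buffer (the hypothetical `slack_per_turn` list), any delay the buffer can’t absorb rolls forward to the next leg, and crew duty limits, swaps, and cancellations are ignored entirely.

```python
# Toy model (an assumption, not any airline's real scheduling system):
# a plane flies a loop of legs, and each turn between legs has some
# scheduled slack in minutes. A delay carries forward to the next leg,
# reduced by that turn's slack, until the buffer fully absorbs it.


def propagate_delay(initial_delay: int, slack_per_turn: list[int]) -> list[int]:
    """Return the delay (in minutes) at the start of each leg in the loop."""
    delays = [initial_delay]
    for slack in slack_per_turn:
        # Slack absorbs part of the delay; whatever is left rolls forward.
        delays.append(max(0, delays[-1] - slack))
    return delays


# Hypothetical numbers: a 120-minute delay on the first leg, six more turns.
lean = propagate_delay(120, [10] * 6)
padded = propagate_delay(120, [45] * 6)

print("lean loop:  ", lean)
print("padded loop:", padded)
```

With these made-up numbers, the lean loop is still 60 minutes behind six legs later, while the padded loop absorbs the full two hours by the third turn. That trade-off between efficiency and buffer is exactly the “how lean is too lean” question.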

This recent week introduced the triple whammy: flight crew shortages due to unexpectedly high volumes of sick pilots and flight attendants; plane delays and cancellations stemming from one of the largest and most widespread weather disruptions in recent history; and, as mentioned earlier, a model that, when bent too much, becomes unwieldy and nearly impossible to manage or fix manually. At that point, you have two choices: manually fix the system (which has grown so complex it would take weeks to unwind) or hit reset, restage all planes and crews, and start the cycle anew.

The reset was the quickest option, and that’s what Southwest did. I doubt we will experience another blip like this again. After all, the system operated like it should and failed when it was pushed outside the parameters it was built to handle.

If I’m right, what happens next is regulation or self-policing that increases the slack the system can handle.

So is technology the problem? I doubt it. Southwest got caught running its system far leaner than it could support, and it failed. Can tech make it better and help it find ways to self-heal when these issues happen next time? Probably. But the real question is: when is lean too lean for an airline?

Let’s see what happens.

-

Secure Software

Reading about Anker’s recent security issues has been interesting. While reading, I came across this great comment on The Verge’s article:

“why did this happen at all when Anker said these cameras were exclusively local and end-to-end encrypted?” and “why did it first lie and then delete those promises when we asked those questions?”

Occam’s razor. As a software developer, I can tell you with about 95% certainty what happened. The Anker software team screwed up and didn’t know about this security hole. They didn’t test this scenario. They just didn’t know. They probably don’t have enough security engineers and checks. It’s probably not a huge company.

As for the lies, the Anker PR/marketing people you talked to have no clue. They are probably just fed information from whoever in the company. They probably didn’t “lie”. Maybe the engineers were still investigating and weren’t sure so they told them that “no chance there’s a security hole”. Maybe a dev manager wanted to cover his/her ass and said, “there’s no way we didn’t test this”. Whatever the case, there’s a gap between reality (i.e. source code) and how the product that the marketing team is responsible for selling (welcome to software development!).

So yes… it’s fun to think of conspiracy theories like the Chinese government ordering Anker to leave a backdoor so that it could keep an eye on the front porch of Americans… but Occam’s razor chalks this up as careless software development and unresponsive marketing/PR (likely both a result of being a small’ish company).

This. Yes, this right here is, in my personal belief, the true reality of the situation.

Mindgrub is not a huge company, but we spend a lot of time focused on the processes we need to create secure and scalable applications. We manage to do this because we are an engineering team of scale, and that requires us to set rules, from branching strategy to mature continuous integration policies, that our engineers can embrace as they move from project to project.

These processes are pretty good but nowhere near perfect, and I can tell you that the way we build applications is light years beyond many organizations I have worked with in the past.

Why? Because many best practices collapse when not run at scale. A solo developer cannot peer review his or her own code. Look at any two- to four-person internal development shop, and 95% of the time you will find cowboy coding happening on a regular basis. Like all humans, we make mistakes, no matter how amazing we may be as individual developers. I can’t begin to tell you how often a basic audit of code, infrastructure, or process makes it immediately obvious that this approach has created technical debt of huge magnitude.

Also common in almost every one of these situations is a rift between the appointed chief engineer(s) and other teams like marketing and sales. Phrases you may hear include “this is what the customer really wants,” “we had to build it this way,” or “if you only understood how it worked.”

Keep an eye out friends.

-

What Security?

Every year all Mindgrub employees are required to complete our annual security training. This year we switched it up and moved to the well-received KnowBe4 training curriculum.

Watching and completing the ~45-minute eLearning session seemed a bit surreal this holiday season. After all, LastPass completely failed, a House representative-elect lied about everything, and Anker was caught lying about its local-only cameras actually connecting to the cloud. That’s without mentioning the many issues still swirling around FTX, from being hacked to its founder running a billion-dollar company with little to no processes in place.

It really makes you stop and realize how hard it increasingly is to keep yourself safe. It’s one thing when we know we need to protect ourselves from those we might label as unsavory, but it becomes much more difficult to protect ourselves from the entities that we expect to protect us.

When I arrived at Mindgrub, we made heavy use of LastPass. While we liked the tool, we found it lacked certain enterprise features we wanted, so we migrated to a different enterprise password manager. That tool, combined with our security processes, helps us limit access to only those who need it while also preventing team members from sharing passwords as text in tools like Slack or email.

Having a tool like LastPass hacked to the point that so many people are now at the mercy of a master password, a gatekeeper that hopefully can survive brute-force attacks, is a pill that is difficult to swallow. LastPass’s customers did everything right and trusted a company whose charter is securing your data better than you can yourself.
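Why does the master password matter so much once vault data is out? An attacker holding an encrypted vault can guess offline as fast as their hardware allows, slowed down only by key stretching such as PBKDF2. The sketch below is a back-of-the-envelope estimate, assuming randomly chosen passwords, a hypothetical attacker speed of 10 billion hashes per second, and 100,000 PBKDF2 iterations as an illustrative figure (not a statement about LastPass’s actual settings). Treating each guess as costing one hash per iteration is a deliberate simplification.

```python
import hashlib
import math
import os

SECONDS_PER_YEAR = 60 * 60 * 24 * 365


def entropy_bits(length: int, alphabet_size: int) -> float:
    """Entropy of a password whose characters are chosen uniformly at random."""
    return length * math.log2(alphabet_size)


def years_to_exhaust(bits: float, hashes_per_second: float, iterations: int) -> float:
    """Worst-case years to try every password when each guess costs
    `iterations` hash computations (a rough model of key stretching)."""
    guesses_per_second = hashes_per_second / iterations
    return (2 ** bits) / guesses_per_second / SECONDS_PER_YEAR


# Hypothetical attacker speed: 10 billion hashes per second.
RATE = 1e10
for label, bits in [("8 lowercase letters", entropy_bits(8, 26)),
                    ("12 random printable chars", entropy_bits(12, 94))]:
    for iterations in (1, 100_000):
        years = years_to_exhaust(bits, RATE, iterations)
        print(f"{label:28} {iterations:>7,} iterations: {years:.3g} years")

# Key stretching with the standard library: the same derivation an
# attacker has to repeat for every single guess.
salt = os.urandom(16)
key = hashlib.pbkdf2_hmac("sha256", b"an example master password", salt, 100_000)
```

The shape of the result matters more than the exact numbers: length and randomness grow the search space exponentially, while iterations only multiply the attacker’s cost by a constant, so a short master password stays weak even behind heavy key stretching.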

The thing is, LastPass is just the most recent of these types of companies to let us down. Y’all remember Equifax, YouTube, Facebook, Marriott, Verizon, …? What is crazy is that this is only the list we know about, and having spent decades working with security specialists, I can absolutely promise you that only a very small percentage of companies ever publicly report most of their security incidents.

What we are facing is the reality that security is a team sport, and heck, maybe a village or country-wide sport. You or I can do everything correctly; however, as has been the case our entire lives, we all have dependencies on people, products, businesses, or governments, and we are all susceptible to the weakest link in that list. Just one chink in our combined armor, and the impacts are tremendous.

So consider this a reminder for all of us to keep being serious about the importance of security in our lives. Be diligent and make sure that we hold our IT and development teams to the security standards we expect of ourselves. Are you a developer? Find a security framework and make sure you and your team follow it.

-

Migrating

My friend recently made the big shift from Android to the iPhone. As I celebrated her finally joining the blue text message club and gaining the ability to join family albums, use SharePlay, and so many other iOS features, we had to start with the very first step: migration.

I’m not the average Apple customer. As a developer and tech enthusiast, I read about features before they are released, tune in to every announcement, and regularly run beta versions of iOS. I typically keep a Google Pixel phone around to keep up with Android trends and test applications we build for our customers, but I had never used Apple’s migration application, so I must admit I was giddy with excitement to see just how well it truly works.

The process could not have been simpler. The new iPhone presented us with a QR code that we scanned with the Android phone to download the Move to iOS app from the Google Play Store. Once downloaded, the app asked us for a one-time code that appeared on the iPhone. After that, we received a few warnings about the access the Move to iOS app requested, but other than that, it was click and wait. The transfer estimate started at nearly 3 hours but, in reality, took closer to 30 minutes. Once migrated, we chucked the Android phone in a corner (joking!!) and completed the iOS setup process. If you have set up an iPhone before, you know the standard options, like setting up Siri and Face ID.

The migration was pretty remarkable in how deeply it copied everything over. It moved over messages, loaded contacts, preconfigured email, calendar, photos, pre-downloaded all of her apps, and even managed to maintain the ring tones and text sounds from her Android phone. She was literally able to pick up the phone and begin using it without skipping a beat.

Once the phone was up and running, I sat, hoping to see how she experienced the dynamics of a much cleaner and easier-to-use phone.

I tried my best to avoid pressing buttons and jumping in, but I did way too much of that. What I witnessed surprised me: decades of software updates had made many features that felt normal to me unwelcoming or complex. So much of what I assumed to be intuitive really came from my years and years of using iOS devices. Features like swiping to search or Control Center are things I imagine many users may never really discover. I notice this same dynamic often at Mindgrub when new employees move from Windows to macOS for the first time. I often take screenshots or use Quick Look to preview files while searching for a particular one, habits that are far from obvious to someone new to the Mac.

Many of these things are both easy and so, so hard. As iOS and macOS have grown, layers have caked on top of each other, and those layers have made us forget that things are no longer as easy or intuitive as they once were.

Migrating was a fascinating reminder to stop and think of the long journey all software takes and remind ourselves of the difference between common knowledge or common intuitiveness and learned intuitiveness.

-

It Wasn’t Me

Ars Technica has an amazing piece on the dystopian possibilities of AI images and deepfakes. As the article notes, deepfakes have been a reality for years, but AI takes what was a skill and makes the process so simple that anyone can channel it for good (or, primarily, bad). It opens up a world where the art of disruption is limited only by our ability to picture something in our imagination and transcribe it into words.

I remember joking about how insane a song like Shaggy’s “It Wasn’t Me” was, when in reality, maybe Shaggy was a sage describing our pending deepfake future. For those thinking the computers won’t fool you, try out this quiz. It’s early days, and fake images are becoming harder and harder to pick out.

-

Google Analytics 4

Google Analytics 4, or GA4, is a reimagining of the analytics platform for a post-page-view world. Many of us still think of web traffic in older terms like hits, pages, and sessions; however, the mobile space and rich internet applications have changed how the web works.

Imagine Instagram and think of a typical interaction. The infinite scroll removes pagination and page views, engagement can be tracked by how long you pause over an image or clip, and taps, while they happen, are not the core way most users interact.

Even the concept of user sessions can seem weird when we track a popular site like Reddit, where users visit multiple times a day. All of these changes have forced us to step back and rethink how we analyze our traffic data and, better yet, overlap streams of data to compare website traffic with other sources, such as mobile traffic. Some Reddit users may move from the website on a desktop to the mobile application on the run.

GA4, for the first time, allows Google Analytics to pull in data streams from multiple sources and generate reports across many distinct platforms. Do you offer an e-commerce experience over the web, mobile, and tablet? We can create data streams to pull data from each source, offering a view of engagement that is more holistic than before. Goal funnels that once showed you abandoned cart rates now let you compare those funnels across all platforms and see trends.
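As a rough illustration of comparing one funnel across streams, the Python sketch below computes cart abandonment per platform and for all platforms combined. The event names `add_to_cart`, `begin_checkout`, and `purchase` are GA4 recommended e-commerce events; the stream names and every count are made up for the example, and in practice these numbers would come from GA4’s funnel reports rather than a hand-written dictionary.

```python
# Hypothetical per-stream counts of users reaching each funnel step,
# invented purely for illustration.
FUNNEL = ["add_to_cart", "begin_checkout", "purchase"]

streams = {
    "web":     {"add_to_cart": 1200, "begin_checkout": 600, "purchase": 300},
    "ios":     {"add_to_cart": 800,  "begin_checkout": 560, "purchase": 400},
    "android": {"add_to_cart": 500,  "begin_checkout": 300, "purchase": 150},
}


def cart_abandonment_rate(counts: dict[str, int]) -> float:
    """Share of users who added to cart but never purchased."""
    return 1 - counts["purchase"] / counts["add_to_cart"]


def combine(all_streams: dict[str, dict[str, int]]) -> dict[str, int]:
    """Sum each funnel step across every stream for a cross-platform view."""
    return {step: sum(counts[step] for counts in all_streams.values())
            for step in FUNNEL}


for name, counts in streams.items():
    print(f"{name:8} abandonment: {cart_abandonment_rate(counts):.0%}")
print(f"{'all':8} abandonment: {cart_abandonment_rate(combine(streams)):.0%}")
```

With these invented numbers, the web funnel leaks the most (75% abandonment vs. 50% on iOS), the kind of cross-platform gap that a single-site view would never surface.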

So how does this all work?

At the core of GA4 is a new focus on data streams, events, insights, and reports, all combined with a querying system that embraces the ideas of reporting platforms like Power BI or Tableau.

Data Streams

In the olden days, each Google Analytics account represented a website. GA4 has chosen to embrace multiple data sources and allow those data sources to live across platforms. This is excellent for a company with various brands, like Gap. In this new world, Gap, Old Navy, and Banana Republic could overlay data streams to better understand KPIs and measure trends across sibling sites.

As I described above, mixing mediums provides even more power. For folks like many of our clients with mobile and web experiences, analytics have lived in two different and distinct platforms: Firebase and Google Analytics. Firebase provides analytics targeted to a mobile experience. As such, it tends to focus on concepts around events: think taps or swipes. It also focuses on time and intent, as engagement with many mobile screens depends on how long a user stays focused on them.

GA4 allows us to pull all these platforms together using data streams, providing a single source to view traffic and analytics across your entire portfolio of experiences.

Events

Once upon a time, we considered interactions at the page level to give us a view of a user interacting with our content, but that is no longer granular enough. In GA4, events work a bit like jQuery or CSS selectors, letting you drill into components of a page and listen for particular types of interactions with content. GA4 also allows developers to use custom code to create unique events they may want to track.

Out of the box, Google Analytics helps us do this by automatically collecting tons of events. It also provides an easy interface to track enhanced events such as scrolling. Unlike automatic events, enhanced events are specific to how your website functions. For example, a blog that offers an infinite scroll of new articles instead of pagination could use a scroll event to capture the equivalent of a new page view. Some websites may also find value in a hover action, something that may autoplay a video or animation.

You can also use recommended events to tie together unique interactions and track them across a multitude of devices. If you look at the recommended events, one can easily imagine monitoring the purchase of a product or a login across mobile and the web. These events also take parameters that capture additional insights, such as how a user logged in: did they use Google, Facebook, or Apple vs. a traditional username and password?

A developer can also record custom events if the combination of automatic, enhanced, and recommended events does not cover your specific needs.
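Events can also be sent server-side through GA4’s Measurement Protocol, which makes the event-plus-parameters model easy to see. This Python sketch only builds the request for a web data stream (a URL carrying `measurement_id` and `api_secret`, and a JSON body with a `client_id` and a list of events); it does not send anything. The measurement ID, API secret, client ID, and product values are placeholders, and `video_hover_preview` is a hypothetical custom event name.

```python
import json
from urllib.parse import urlencode

# GA4 Measurement Protocol collection endpoint.
MP_ENDPOINT = "https://www.google-analytics.com/mp/collect"


def build_mp_request(measurement_id: str, api_secret: str,
                     client_id: str, events: list[dict]) -> tuple[str, str]:
    """Build (url, json_body) for a web-stream Measurement Protocol hit."""
    query = urlencode({"measurement_id": measurement_id, "api_secret": api_secret})
    body = json.dumps({"client_id": client_id, "events": events})
    return f"{MP_ENDPOINT}?{query}", body


events = [
    # Recommended event; the `method` parameter records how the user logged in.
    {"name": "login", "params": {"method": "Google"}},
    # Recommended e-commerce event with its standard parameters.
    {"name": "purchase", "params": {
        "transaction_id": "T-1001", "currency": "USD", "value": 42.50,
        "items": [{"item_id": "SKU-123", "item_name": "Example item",
                   "price": 42.50, "quantity": 1}],
    }},
    # Hypothetical custom event for a hover-to-autoplay interaction.
    {"name": "video_hover_preview", "params": {"video_id": "intro-clip"}},
]

# Placeholder credentials; real values come from the GA4 data stream settings.
url, body = build_mp_request("G-XXXXXXXXXX", "YOUR_API_SECRET",
                             "555.1234567890", events)
print(url)
print(body)
```

To actually send it, you would POST the body to that URL, for example with `urllib.request`. App streams use `firebase_app_id` and `app_instance_id` in place of `measurement_id` and `client_id`, and on the web most teams would fire these same events through the Google tag instead.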

Insights

Google uses an immense amount of data to provide insights using artificial intelligence and machine learning. While how it works is largely a black box, we do know that most websites use Google Analytics to track traffic and user interactions. This treasure trove of data allows Google to learn more than any other company about how our customers interact with the web and with our competitors’ websites. It can also use this data to surface new trends or correlations that we might otherwise miss.

These correlations are interesting, but it can be hard to make them actionable. For example, analytics might notice that iPad users have a higher likelihood of completing a purchase.

I avoid using most company websites to complete commerce transactions and prefer a mobile device and an app. Unlocking with Touch ID or Face ID and having card options easily stored make the app experience much quicker. It’s not abnormal for me to shop on one device and complete a transaction on another. Analytics insights can help you find these trends.

You can also create custom insights to spot correlations quickly. For example, you could create one that flags when revenue is up over the last hour or when conversions are strong in the state of New York.

Reports

With this move, Google hopes we will reconsider how we determine the success of a website or mobile app. To encourage that, it is eliminating many of the default reports and dashboards we have come to expect. Gone are views built around antiquated terms like page views; in their place is a powerful custom reporting tool that lets you build reports that better reflect the KPIs you use to measure success.

Migrating

If you, like many, already have the older version of Google Analytics, migrating can be pretty easy and painless. First off, the existing Google tag you installed will work for both Universal Analytics and the new GA4. Once it is set up and associated with a data stream, GA4 will also attempt to analyze site traffic to determine events and surface information it thinks you will find helpful. Of course, you can also customize events, as discussed earlier, to better track specific interactions.
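If the site already loads the Google tag (gtag.js) rather than the older analytics.js or Google Tag Manager, one way to picture running both versions side by side is a single tag with one `config` command per property. This is a minimal sketch under that assumption: `UA-XXXXXXX-1` and `G-XXXXXXXXXX` are placeholders for your own IDs, and `gtag`/`dataLayer` are simplified stand-ins that only record calls so the example runs outside a browser.

```typescript
// Simplified stand-in for the Google tag: each call is recorded in dataLayer.
type GtagCommand = [command: string, ...args: unknown[]];
const dataLayer: GtagCommand[] = [];

function gtag(...args: GtagCommand): void {
  dataLayer.push(args);
}

// Placeholder IDs: substitute the IDs from your own properties.
const UNIVERSAL_ANALYTICS_ID = "UA-XXXXXXX-1";
const GA4_MEASUREMENT_ID = "G-XXXXXXXXXX";

gtag("js", new Date());
// One tag, two destinations: Universal Analytics keeps collecting
// while GA4 starts building its own, separate history.
gtag("config", UNIVERSAL_ANALYTICS_ID);
gtag("config", GA4_MEASUREMENT_ID);
```

Running both in parallel does not carry old data into GA4, but it does let the new property accumulate history before the old one stops.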

The biggest concern is that the data structures that underlie GA4 are very, very different from those of the old GA. This means that you cannot move previous data over. In this sense, it is less a migration than a new and separate GA4 setup.

If this historical data is valuable or something you hope to refer to in the future, I recommend exporting your GA data before the planned July cut-off.

-

The Paywall

I frequently share article excerpts with friends and family. Sometimes it's something fun like a Buzzfeed listicle, but most times, it's an article relevant to shared interests or recent conversations. Paywalls almost always throw a huge wrench into this, to the point that a good friend asked if I could share a synopsis or quote since they cannot access the full article.

I understand why paywalls exist and the importance of paying journalists what they are worth, but paywalls continue to feel like the wrong solution. I read various publications, many filled with ads, and subscribing to all of them is not in my budget. Some of my favorite sources publish great articles that I still avoid sharing or referencing, because I know the burden I'm passing on to others.

As I'm reminded by a great piece by John Gruber, what I continue to see is popular media having a smaller and smaller presence and relevance as they lock more content behind paywalls, inadvertently increasing the number of independent media sources. This is not wrong on its own, but as popular voices become inaccessible, they can't counter the lies spread by more viral, independent sources.

I could easily argue that paywalls and restricted access to content are behind the rise of false viral content spreading on social media. When the source is not available, you are left to find a new voice and message to trust.

In addition to trust, paywalls must also fight subscription burnout. I'm from New Orleans and have lived in many cities beyond my hometown. As such, I struggle to choose which local news outlet to subscribe to, and I commonly find myself disregarding articles shared by my parents or friends because I've already used my three free articles that month on a daily that is no longer my daily. Like all subscriptions these days, I need to choose the options that bring me the most value, and sadly, that limits me and keeps me from reading all the news sources I wish I had regular access to.

This situation baffles me because many governments hope to save our traditional media with fines targeted at aggregators and social networks like Facebook. Facebook is right: why should they pay for this content? Others will duplicate, replicate, or write their own content and continue to diminish the value of the original source, or quickly supplant it with a more modern Instagram or TikTok view of the media.

In this post alone, I passed up links to several publications because you would need a subscription to read them. Something has to change, but time and time again, we have found that locking up content and preventing access is a recipe for decline. After all, when was Howard Stern last relevant?